Following research conducted by a colleague of mine at CyberArk Labs [1], I gained a better understanding of NVMe-oF/TCP. This kernel subsystem exposes one or more INET sockets, which makes it a promising attack surface. We decided to continue in this direction and explore the subsystem more deeply.

To do so, we took advantage of the widely used kernel fuzzer, syzkaller [2], mainly because it can be extended to support new subsystems using syzkaller’s syscall descriptions.

This blog post explores our research methodology, milestones, and, ultimately, the results.

TL;DR

Using syzkaller, with our added support for the NVMe-oF/TCP subsystem, we found five new vulnerabilities.

Fuzzing The Linux Kernel

Why?

I always like to start with the “whys.” In this case, the answer is fairly straightforward: the Linux kernel is probably the most widely used kernel in today’s cloud computing and IoT world. Finding bugs and vulnerabilities in the kernel can therefore have a considerable impact, from both an attack and a defense perspective.

How?

In recent years, fuzzing has become a common technique for this kind of research, with the Linux kernel as the target in our case. But what should we do when the target codebase is vast and complex? The answer is to use a widely used fuzzer built for exactly this purpose: syzkaller.

What?

The rest of this blog assumes you are already familiar with the core idea of a fuzzer and with the terms fuzzing [3], coverage [4], and corpus [5].

According to the project’s official repository, syzkaller [6] is a coverage-guided kernel fuzzer. This means that syzkaller creates new inputs (mutated executable programs) to test, and if an input reaches a new section of the tested code, syzkaller saves it in the corpus. The saved inputs are then mutated again in search of newly explored code blocks. Another part of input creation is the syscall descriptions, which we will discuss later.
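To make this loop concrete, here is a toy model of coverage-guided fuzzing in C. It is only a sketch under simplifying assumptions: the hypothetical target() function marks which of its branches an input reached in a small bitmap (standing in for kernel coverage), and mutation replaces one random byte. syzkaller mutates whole syscall programs and gets its coverage from KCOV, but the "keep whatever reaches new code" logic is the same idea.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Toy model of coverage-guided fuzzing; not syzkaller's implementation. */
#define INPUT_LEN 4
#define NUM_BRANCHES 5
#define MAX_CORPUS 64

/* Hypothetical target: every nested check that passes is one new branch. */
static void target(const uint8_t *in, uint8_t cov[NUM_BRANCHES]) {
    cov[0] = 1;
    if (in[0] == 'N') {
        cov[1] = 1;
        if (in[1] == 'V') {
            cov[2] = 1;
            if (in[2] == 'M') {
                cov[3] = 1;
                if (in[3] == 'e')
                    cov[4] = 1;
            }
        }
    }
}

/* Runs `iterations` rounds and returns how many branches were covered. */
static int fuzz(unsigned seed, int iterations) {
    uint8_t corpus[MAX_CORPUS][INPUT_LEN] = {{0}};
    int corpus_size = 1;                 /* start from one all-zero seed */
    uint8_t total_cov[NUM_BRANCHES] = {0};

    srand(seed);
    for (int it = 0; it < iterations; it++) {
        uint8_t input[INPUT_LEN];
        /* Pick a corpus entry and overwrite one random byte. */
        memcpy(input, corpus[rand() % corpus_size], INPUT_LEN);
        input[rand() % INPUT_LEN] = (uint8_t)(rand() % 256);

        uint8_t cov[NUM_BRANCHES] = {0};
        target(input, cov);

        /* Did this input reach a branch never seen before? */
        int new_cov = 0;
        for (int b = 0; b < NUM_BRANCHES; b++) {
            if (cov[b] && !total_cov[b]) {
                total_cov[b] = 1;
                new_cov = 1;
            }
        }
        /* Keep inputs that found new code so they get mutated further. */
        if (new_cov && corpus_size < MAX_CORPUS)
            memcpy(corpus[corpus_size++], input, INPUT_LEN);
    }

    int covered = 0;
    for (int b = 0; b < NUM_BRANCHES; b++)
        covered += total_cov[b];
    return covered;
}
```

Calling fuzz(1, 1000000) should end with all five branches covered, because each step only has to guess one more byte of the “NVMe” prefix, starting from an input already saved in the corpus. A blind fuzzer without coverage feedback would have to guess all four bytes at once.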

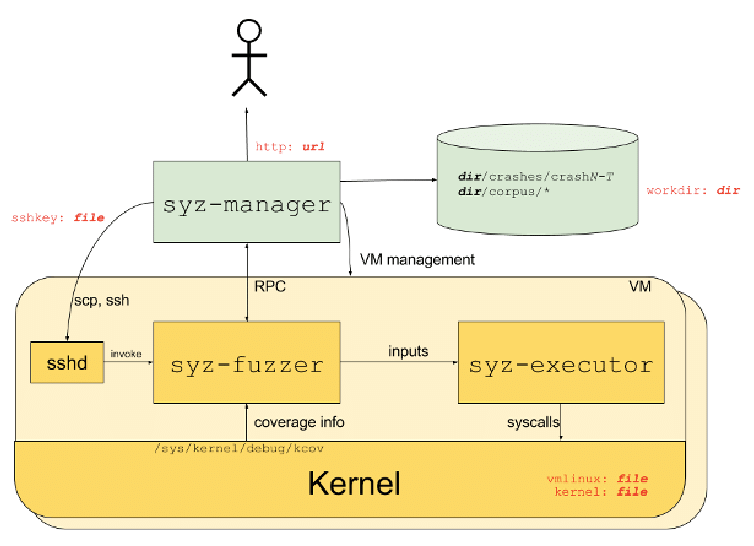

Above (Figure 1) is a schematic taken from the syzkaller repository. It shows an abstraction of the fuzzing process and the three major parts of the fuzzer.

The syz-manager is the main component of syzkaller and controls the flow of the fuzzing process. It creates, monitors, and restarts the VMs used for fuzzing, starts the syz-fuzzer process inside each VM, and manages the corpus.

The syz-fuzzer process is responsible for input generation, mutation, and minimization. Another vital responsibility of this component is sending the coverage information back to the manager and the inputs to the syz-executor for it to execute.

The syz-executor process takes the input program from syz-fuzzer, executes it, and sends the result back to syz-fuzzer using shared memory.
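The shared-memory handoff between syz-executor and syz-fuzzer can be illustrated with a small C sketch. The struct exec_result layout and the function name are hypothetical, chosen only for illustration; syzkaller’s real protocol carries much more information. The point is that a MAP_SHARED mapping created before fork() is visible to both processes, so the executing process can write its results and the parent can read them back directly.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical result layout shared between the two processes. */
struct exec_result {
    int32_t  status;      /* exit status of the executed "program" */
    uint32_t ncover;      /* number of coverage entries written */
    uint64_t cover[16];   /* coverage PCs (fake values here) */
};

/* Forks a child "executor" that writes into a shared mapping; returns the
 * number of coverage entries the parent reads back, or -1 on error. */
static int run_executor_once(uint32_t fake_pcs) {
    struct exec_result *res = mmap(NULL, sizeof(*res), PROT_READ | PROT_WRITE,
                                   MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (res == MAP_FAILED)
        return -1;
    res->ncover = 0;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(res, sizeof(*res));
        return -1;
    }
    if (pid == 0) {
        /* Child: pretend to execute a program and record coverage. */
        uint32_t n = fake_pcs < 16 ? fake_pcs : 16;
        for (uint32_t i = 0; i < n; i++)
            res->cover[i] = 0xffffffff81000000ull + i;
        res->ncover = n;
        res->status = 0;
        _exit(0);
    }

    /* Parent: wait for the child, then read what it wrote. */
    int wstatus;
    if (waitpid(pid, &wstatus, 0) < 0) {
        munmap(res, sizeof(*res));
        return -1;
    }
    int n = (int)res->ncover;
    munmap(res, sizeof(*res));
    return n;
}
```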

Syzkaller Syscall Descriptions

As mentioned before, syz-fuzzer is the component that creates new inputs. But how does it construct them? For that, syzkaller uses syscall descriptions, a form of grammar-based input generation.

Syscall descriptions are written in syzlang, a pseudo-formal grammar language, and syzkaller uses them as its grammar. From these descriptions, the fuzzer knows what each syscall should look like in terms of name, arguments, and return type. syz-fuzzer takes this grammar information and generates input programs accordingly.

syzlang Syntax

Describing a syscall

The syntax of the syscall descriptions might seem intimidating at first, but it is straightforward to grasp.

Let’s start with an example of the open(2) syscall. The syscall definition [7] is:

```c
SYSCALL_DEFINE3(open, const char __user *, filename, int, flags, umode_t, mode)
```

From that, we can see that open takes three arguments of the types const char *, int, and umode_t (an alias for unsigned short).

According to syzkaller’s documentation of the syscall description syntax [8], a syscall description has the following grammar:

```
syscallname "(" [arg ["," arg]*] ")" [type] ["(" attribute* ")"]
```

Let’s break it down:

- syscallname: the syscall name, in our case “open”

- “(”: marks the start of the syscall’s arguments

- For every argument, we write the argument name (arg in the grammar above), followed by a space, followed by the argument type

- “,”: separates arguments

- “)”: marks the end of the syscall’s arguments

- If the syscall returns a value, the type of the returned value follows the closing parenthesis, separated by a space

- For now, we will not discuss the attributes at the end of the description

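Following those rules, the open(2) syscall description looks like this:

```
open(file ptr[in, filename], flags flags[open_flags], mode flags[open_mode]) fd
```

- open: the syscall name

- file: the name of argument 1

- ptr[in, filename]: the type of argument 1, an input pointer to a file name string

- flags[open_flags]: the special type “flags”, whose sub-type open_flags is an enum-like set of allowed values

- fd: the return value, of the special type “fd”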

As you can see above, there are also some special types, such as flags and fd. For more information, please read the documentation [11], as this is out of the scope of this blog.
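As a rough illustration of what a flags[...] type means for the generated programs, the C sketch below ORs together a random subset of an allowed value set. The open_flags_subset array is a hypothetical subset chosen for this example (syzkaller’s real open_flags set is larger), and syzkaller’s actual generator uses more strategies than this one; the sketch only shows the basic idea.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>

/* Hypothetical subset of values a flags[open_flags] argument may combine. */
static const int open_flags_subset[] = {
    O_RDONLY, O_WRONLY, O_RDWR, O_APPEND, O_CREAT, O_EXCL, O_TRUNC,
    O_NONBLOCK, O_CLOEXEC,
};
#define NFLAGS (sizeof(open_flags_subset) / sizeof(open_flags_subset[0]))

/* OR together a random number of randomly chosen allowed values. */
static int random_flags(const int *vals, size_t n) {
    int result = 0;
    size_t picks = (size_t)rand() % (n + 1);   /* 0..n values */
    for (size_t i = 0; i < picks; i++)
        result |= vals[(size_t)rand() % n];
    return result;
}

/* Every bit that can appear in a value produced by random_flags(). */
static int flags_mask(const int *vals, size_t n) {
    int mask = 0;
    for (size_t i = 0; i < n; i++)
        mask |= vals[i];
    return mask;
}
```

A generated open() call would then receive a value such as random_flags(open_flags_subset, NFLAGS) as its flags argument, which keeps most inputs meaningful to the kernel while still exercising unusual combinations.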

Breaking Down Struct Descriptions

This time, we’ll start with the syntax for describing structs. We will use the syntax explanation from syzkaller docs:

```
structname "{" "\n"
	(fieldname type ("(" fieldattribute* ")")? "\n")+
"}" ("[" attribute* "]")?
```

- Start with the name of the struct

- Open the fields section with a curly bracket, “{”

- Write each field on a line of its own:
  - starting with the field name,
  - followed by a space,
  - and finally the field type

- End the struct description with a closing curly bracket, “}”

As we get closer to the NVMe description, let’s take an example of a struct defined for the NVMe driver.

Following is struct nvme_format_cmd, as defined in the kernel sources:

```c
struct nvme_format_cmd {
	__u8	opcode;
	__u8	flags;
	__u16	command_id;
	__le32	nsid;
	__u64	rsvd2[4];
	__le32	cdw10;
	__u32	rsvd11[5];
};
```

In syzlang, it will look like this:

```
nvme_format_cmd {
	opcode		int8
	flags		int8
	command_id	int16
	nsid		int32
	rsvd2		array[int64, 4]
	cdw10		int32
	rsvd11		array[int32, 5]
}
```

- We start with the struct name, nvme_format_cmd.

- The first field, opcode, is defined as __u8 in the sources. The corresponding syzlang type is int8, an 8-bit integer.

- In the fourth and sixth fields (nsid and cdw10), we use int32, whereas in the sources the type is explicitly a little-endian 32-bit integer (__le32). For randomly generated values, syzkaller only needs to know how many bits to generate, so the byte order does not matter here. (When it does, syzlang also offers big-endian types such as int32be.)

- Another thing to mention is the array type. As you probably guessed, it declares an array. Inside the square brackets, we tell syzkaller how to construct it: for the last field, the array consists of five elements, each of which is a 32-bit integer.
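To double-check that the syzlang description and the C struct describe the same memory layout, the following user-space sketch mirrors struct nvme_format_cmd with fixed-width integer types (uint8_t for __u8, uint32_t for __le32, and so on) and verifies the field offsets at compile time. The set_nsid() helper is hypothetical; it shows where byte order does matter: the kernel interprets nsid as little-endian, so a hand-written value should be encoded with htole32().

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <endian.h>
#include <stddef.h>
#include <stdint.h>

/* User-space mirror of the kernel's struct nvme_format_cmd. */
struct nvme_format_cmd {
    uint8_t  opcode;
    uint8_t  flags;
    uint16_t command_id;
    uint32_t nsid;        /* __le32 in the kernel */
    uint64_t rsvd2[4];
    uint32_t cdw10;       /* __le32 in the kernel */
    uint32_t rsvd11[5];
};

/* Offsets implied by: int8, int8, int16, int32, array[int64, 4],
 * int32, array[int32, 5]. */
_Static_assert(offsetof(struct nvme_format_cmd, command_id) == 2, "command_id");
_Static_assert(offsetof(struct nvme_format_cmd, nsid) == 4, "nsid");
_Static_assert(offsetof(struct nvme_format_cmd, rsvd2) == 8, "rsvd2");
_Static_assert(offsetof(struct nvme_format_cmd, cdw10) == 40, "cdw10");
_Static_assert(offsetof(struct nvme_format_cmd, rsvd11) == 44, "rsvd11");
_Static_assert(sizeof(struct nvme_format_cmd) == 64, "total size");

/* Hypothetical helper: store nsid in the byte order the kernel expects. */
static void set_nsid(struct nvme_format_cmd *cmd, uint32_t nsid) {
    cmd->nsid = htole32(nsid);
}
```

The 64-byte total matches the size of an NVMe submission queue entry.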

NVMe-oF/TCP Subsystem

NVMe

NVMe (Non-Volatile Memory Express) is a protocol used to control storage devices attached over PCI Express, such as SSDs. Over the years, the NVMe specifications were extended with NVMe over Fabrics (NVMe-oF), and NVMe-oF/TCP is its TCP transport: a protocol that allows remote access to NVMe storage devices over TCP/IP networks.

NVMe-oF/TCP

One of the key uses of NVMe-oF/TCP is in data centers and cloud environments. By leveraging the high-performance and low-latency characteristics of NVMe-based storage devices, NVMe-oF/TCP allows for the consolidation and virtualization of storage resources. This enables the efficient utilization of storage infrastructure and improves the overall performance of storage systems.

NVMe-oF/TCP can be utilized in data centers, edge computing and remote office environments. By extending its benefits over wide-area networks, centralized storage resources can be accessed remotely, enabling efficient data sharing and collaboration.

Driving NVMe-oF/TCP on a Linux Machine

Linux supports NVMe-oF/TCP with two drivers: nvme-tcp, the host (initiator) side that connects to remote storage, and nvmet-tcp, the target side that exports NVMe storage and establishes and manages incoming NVMe-oF/TCP connections. Together, they allow Linux systems to communicate with NVMe storage devices over TCP/IP networks. The kernel code discussed in the rest of this post (the nvmet_tcp_* functions) belongs to the target side. The Linux community actively maintains and develops these drivers, keeping them up to date with the NVMe-oF/TCP specification.

Why Does It Matter to Us?

When an NVMe-oF/TCP target is configured, the Linux kernel exposes one or more listening sockets and handles every received packet by itself, without any user-space process involved. Therefore, if an attacker finds and exploits a vulnerability there, the machine could be affected remotely, potentially with the highest impact.

Syzkaller and Subsystems

To make syzkaller capable of fuzzing the Linux kernel, the developers of the fuzzer decided to go with a modular approach, giving the option to add subsystem support, one at a time.

In Linux, a subsystem refers to a component within the Linux kernel responsible for a specific functionality or set of functionalities.

To add support for such a subsystem to syzkaller, create a description file named <new_description_name>.txt and save it under sys/linux/ (that is, sys/linux/<new_description_name>.txt).

NVMe-oF/TCP Description

Let’s take a brief look at a significant part of the description:

nvme_of_msg

```
sendto$inet_nvme_pdu(fd nvme_sock, pdu ptr[in, nvme_tcp_pdu], len len[pdu], f const[0], addr const[0], addrlen const[0]) # ---> 1

nvme_tcp_pdu [
	icreq	nvme_tcp_icreq_pdu
	icresp	nvme_tcp_icresp_pdu
	cmd	nvme_tcp_cmd_pdu
	rsp	nvme_tcp_rsp_pdu
	r2t	nvme_tcp_r2t_pdu
	data	nvme_tcp_data_pdu # ---> 2
]

nvme_tcp_data_pdu { # ---> 3
	hdr		nvme_tcp_hdr_data_pdu_union
	command_id	int16
	ttag		int16
	data_offset	int32
	data_length	int32
	rsvd		array[int8, 4]
} [size[NVME_TCP_DATA_PDU_SIZE]]

nvme_tcp_hdr_data_pdu_union [ # ---> 4
	nvme_tcp_hdr_data_pdu_h2c		nvme_tcp_hdr_t_const[nvme_tcp_h2c_data, const[NVME_TCP_DATA_PDU_SIZE, int8]] # ---> 4.1
	nvme_tcp_hdr_data_pdu_h2c_hdigest	nvme_tcp_hdr_t_const_extra[nvme_tcp_h2c_data, const[NVME_TCP_DATA_PDU_SIZE, int8], const[NVME_TCP_HDR_H2C_DIGEST_PDO_HDIGEST, int8]]
	nvme_tcp_hdr_data_pdu_h2c_no_hdigest	nvme_tcp_hdr_t_const_extra[nvme_tcp_h2c_data, const[NVME_TCP_DATA_PDU_SIZE, int8], const[NVME_TCP_HDR_H2C_DIGEST_PDO_NO_HDIGEST, int8]]
	nvme_tcp_hdr_data_pdu_c2h		nvme_tcp_hdr_t_const[nvme_tcp_c2h_data, const[0, int8]]
]

type nvme_tcp_hdr_t_const[PDU_TYPE, HLEN_SIZE] { # 5
	type	const[PDU_TYPE, int8]
	flags	flags[nvme_tcp_pdu_flags, int8]
	hlen	const[HLEN_SIZE, int8]
	pdo	int8
	plen	int32
} [packed]

nvme_tcp_pdu_flags = NVME_TCP_F_HDGST, NVME_TCP_F_DDGST, NVME_TCP_F_DATA_LAST, NVME_TCP_F_DATA_SUCCESS # 7
```

1. The first part is the syscall description of sendto(2). Taking a closer look, we can see the “$” symbol, which marks a variant: a “regular” syscall specialized for a specific use. In our example, sendto$inet_nvme_pdu is a variant of sendto that always sends a message to an nvme_sock, with a payload that is always of type nvme_tcp_pdu.

2. We didn’t discuss unions in this blog, but all you need to know is that syzkaller picks a random member of the nvme_tcp_pdu union to send in the sendto$inet_nvme_pdu call. Here, we’ll take a closer look at the union’s data field.

3. nvme_tcp_data_pdu is a struct that defines the actual PDU (protocol data unit) sent as the payload of our TCP packet. The interesting part of this struct is the hdr field, which encapsulates yet another union.

4. In this union, we define the PDU headers available to us. These four headers are taken from the NVMe-oF/TCP specification document [9].

4.1. Each union field here is built from a type template (again, I recommend reading the syzkaller docs on syzlang syntax). Templates act like regular structs in syzlang, but they take parameters, so one definition can produce several structs whose fields differ slightly. In our example, the template nvme_tcp_hdr_t_const represents a generic NVMe-oF/TCP header and, given different parameters, produces different structs such as nvme_tcp_hdr_data_pdu_h2c and nvme_tcp_hdr_data_pdu_c2h.

5. The definition type nvme_tcp_hdr_t_const[PDU_TYPE, HLEN_SIZE] declares two parameters, PDU_TYPE and HLEN_SIZE. Each struct that uses the template must pass values for them, and these values expand, like C macros, wherever the parameters appear. In this example, the type field is an int8 constant that always holds the value of PDU_TYPE. So nvme_tcp_hdr_data_pdu_h2c, discussed above, is defined as an nvme_tcp_hdr_t_const, and its type field is the constant nvme_tcp_h2c_data. Note that the numeric value of nvme_tcp_h2c_data is not written in the description; it is determined while compiling syzkaller with the added subsystem.
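To see what one expansion of the template produces on the wire, the C sketch below lays out an H2C data PDU as packed structs and fills its header the way nvme_tcp_hdr_t_const fixes it: constant type and hlen, with flags, pdo, and plen left variable. The field layout and the type and flag values follow the kernel’s include/linux/nvme-tcp.h; the build_h2c_data() helper is hypothetical, and hlen is computed with sizeof, assuming NVME_TCP_DATA_PDU_SIZE equals the 24-byte size of the data PDU header.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <endian.h>
#include <stdint.h>
#include <string.h>

enum { NVME_TCP_H2C_DATA = 0x6 };          /* nvme_tcp_h2c_data */
enum {
    NVME_TCP_F_HDGST        = 1 << 0,
    NVME_TCP_F_DDGST        = 1 << 1,
    NVME_TCP_F_DATA_LAST    = 1 << 2,
    NVME_TCP_F_DATA_SUCCESS = 1 << 3,
};

/* Common 8-byte header shared by all NVMe-oF/TCP PDUs. */
struct nvme_tcp_hdr {
    uint8_t  type;
    uint8_t  flags;
    uint8_t  hlen;
    uint8_t  pdo;
    uint32_t plen;    /* little-endian on the wire */
} __attribute__((packed));

struct nvme_tcp_data_pdu {
    struct nvme_tcp_hdr hdr;
    uint16_t command_id;
    uint16_t ttag;
    uint32_t data_offset;
    uint32_t data_length;
    uint8_t  rsvd[4];
} __attribute__((packed));

/* Hypothetical helper: type and hlen are fixed, as in the template;
 * flags, pdo and plen are the parts the fuzzer is free to vary. */
static void build_h2c_data(struct nvme_tcp_data_pdu *pdu, uint8_t flags,
                           uint8_t pdo, uint32_t plen) {
    memset(pdu, 0, sizeof(*pdu));
    pdu->hdr.type  = NVME_TCP_H2C_DATA;
    pdu->hdr.flags = flags;
    pdu->hdr.hlen  = (uint8_t)sizeof(*pdu);
    pdu->hdr.pdo   = pdo;
    pdu->hdr.plen  = htole32(plen);
}
```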

Connecting a Socket to NVMe

To send those TCP messages, we first need to open a socket. However, syzkaller runs each input in a sandboxed environment, and these sandboxes are placed in a new network namespace [10]. A socket belongs to the network namespace it was created in, so a socket opened inside the sandbox cannot connect to the NVMe-oF/TCP socket that listens in the init network namespace (the main network namespace in Linux). To solve this problem, we need to open the socket in the init network namespace and send our packets from it.

I won’t go deep into this subject, as it is somewhat out of scope here. Still, to create such a socket, every socket() call in the inputs generated by syzkaller would have to be wrapped with setns(): switch to the init network namespace before creating the socket, then return to the sandboxed network namespace afterward. Achieving that for every generated input would take too much effort.

Pseudo-Syscall to Initialize Connection to NVMe

My first workaround, instead of using setns(), was to recompile syzkaller with a new type of sandbox I created that simply skips creating a new network namespace. However, when I discussed this with the syzkaller developers, they suggested a different approach: pseudo-syscalls.

Besides regular syscalls, syzkaller also supports adding pseudo-syscalls. Pseudo-syscalls are functions defined in the syz-executor sources that act like syscalls. That means that whenever syzkaller generates new input or mutates one, it can use the pseudo-syscall as part of the generated program.

Luckily, there is already a pseudo-syscall [] in syzkaller’s sources, called syz_init_net_socket(), that does exactly what we want – creates a new socket in the init net namespace. But yet again, after trying to use it, I discovered that this solution does not fit my needs. syz_init_net_socket() only allows some types of socket to be created in the init net namespace, and none of those sockets is a socket(AF_INET, SOCK_STREAM, 0) type. This is to prevent syzkaller from creating arbitrary sockets in the init net namespace that might cause problems.

At this point, I had no option but to add a new pseudo-syscall designed specifically for NVMe-oF/TCP. This new pseudo-syscall, syz_socket_connect_nvme_tcp(), reuses the logic of syz_init_net_socket(): it switches to the init network namespace, creates the socket, and returns to the original network namespace, but without checking the socket type. To ensure that syz_socket_connect_nvme_tcp() is used only for NVMe-oF/TCP connections, I hardcoded the connect operation to a fixed address, localhost:4420, where 4420 is the default NVMe-oF/TCP port. The function then returns the socket file descriptor for later use.

```c
// kInitNetNsFd and fail() are provided by syz-executor.
static long syz_socket_connect_nvme_tcp()
{
	struct sockaddr_in nvme_local_address;
	int netns = open("/proc/self/ns/net", O_RDONLY);
	if (netns == -1)
		return netns;
	if (setns(kInitNetNsFd, 0)) { // Switch to the init net namespace
		close(netns);
		return -1;
	}
	int sock = syscall(__NR_socket, AF_INET, SOCK_STREAM, 0x0);
	int err = errno;
	if (setns(netns, 0)) // Switch back to the sandbox's net namespace
		fail("setns(netns) failed");
	close(netns);
	errno = err;
	if (sock == -1)
		return -1;
	// The socket stays in the namespace it was created in (init netns).
	// We only connect to an NVMe-oF/TCP target on 127.0.0.1:4420.
	memset(&nvme_local_address, 0, sizeof(nvme_local_address));
	nvme_local_address.sin_family = AF_INET;
	nvme_local_address.sin_port = htobe16(4420);
	nvme_local_address.sin_addr.s_addr = htobe32(0x7f000001);
	err = syscall(__NR_connect, sock, &nvme_local_address, sizeof(nvme_local_address));
	if (err != 0) {
		close(sock);
		return -1;
	}
	return sock;
}
```

With this new pseudo-syscall, we can now ask syzkaller not to use the socket() and connect() syscalls and instead use syz_socket_connect_nvme_tcp() to create the socket in the init network namespace, connect to NVMe-oF/TCP, and then return the socket file descriptor to be used later with sendto().
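For comparison, here is a plain user-space sketch of what a generated program does with such a socket: connect to 127.0.0.1:4420 and send one PDU with sendto(fd, buf, len, 0, NULL, 0), matching the const[0] flags, addr, and addrlen arguments in the description. Unlike the pseudo-syscall, it does not call setns(), so it assumes the target listens in the current network namespace. The payload is an ICReq PDU (type 0x0, 128 bytes), with fields following struct nvme_tcp_icreq_pdu in the kernel’s include/linux/nvme-tcp.h; the helper names are hypothetical.

```c
#define _DEFAULT_SOURCE
#include <arpa/inet.h>
#include <assert.h>
#include <endian.h>
#include <netinet/in.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

struct nvme_tcp_hdr {
    uint8_t  type, flags, hlen, pdo;
    uint32_t plen;
} __attribute__((packed));

struct nvme_tcp_icreq_pdu {
    struct nvme_tcp_hdr hdr;
    uint16_t pfv;        /* PDU format version; 0 means version 1.0 */
    uint8_t  hpda;
    uint8_t  digest;
    uint32_t maxr2t;
    uint8_t  rsvd2[112];
} __attribute__((packed));

/* Hypothetical helper: a zeroed ICReq with a consistent header. */
static void build_icreq(struct nvme_tcp_icreq_pdu *pdu) {
    memset(pdu, 0, sizeof(*pdu));
    pdu->hdr.type = 0x0;                              /* nvme_tcp_icreq */
    pdu->hdr.hlen = (uint8_t)sizeof(*pdu);            /* 128 */
    pdu->hdr.plen = htole32((uint32_t)sizeof(*pdu));
}

/* Returns the number of bytes sent, or -1 if no target is reachable. */
static ssize_t send_icreq_local(void) {
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_port = htons(4420);
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    if (connect(fd, (struct sockaddr *)&addr, sizeof(addr)) != 0) {
        close(fd);
        return -1;
    }
    struct nvme_tcp_icreq_pdu pdu;
    build_icreq(&pdu);
    /* flags, dest_addr and addrlen are 0/NULL, as in the description. */
    ssize_t n = sendto(fd, &pdu, sizeof(pdu), 0, NULL, 0);
    close(fd);
    return n;
}
```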

Linux Kernel Coverage – KCOV

KCOV, which stands for Kernel COVerage, is a Linux kernel subsystem designed to collect coverage information for running tasks. In other words, when KCOV is enabled, the kernel records which kernel code was executed on behalf of a task and exposes this information to user space through /sys/kernel/debug/kcov.

How Does It Work – In A Nutshell

When the kernel is built with KCOV, the compiler inserts a call to __sanitizer_cov_trace_pc() into every basic block of the kernel code. Each time a basic block executes, this callback records its return address, which identifies the executed block, into a buffer associated with the task. User space maps that buffer by calling mmap() on /sys/kernel/debug/kcov, so the coverage information can be used for debugging or fuzzing.
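The following C sketch, adapted from the example in the kernel’s KCOV documentation [12], shows the user-space side: it opens /sys/kernel/debug/kcov, sizes and maps the shared buffer, enables tracing for the current thread, and reads how many PCs a single syscall produced. It assumes a kernel built with CONFIG_KCOV and debugfs mounted at /sys/kernel/debug; otherwise, the function simply returns -1. The function name is hypothetical.

```c
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

#define KCOV_INIT_TRACE _IOR('c', 1, unsigned long)
#define KCOV_ENABLE     _IO('c', 100)
#define KCOV_DISABLE    _IO('c', 101)
#define COVER_SIZE      (64 << 10)   /* buffer size in unsigned longs */
#define KCOV_TRACE_PC   0

/* Returns the number of PCs recorded for one read(-1, ...) call,
 * or -1 if KCOV is unavailable. */
static long count_pcs_for_bad_read(void) {
    int fd = open("/sys/kernel/debug/kcov", O_RDWR);
    if (fd == -1)
        return -1;
    if (ioctl(fd, KCOV_INIT_TRACE, COVER_SIZE)) {
        close(fd);
        return -1;
    }
    unsigned long *cover = mmap(NULL, COVER_SIZE * sizeof(unsigned long),
                                PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (cover == MAP_FAILED) {
        close(fd);
        return -1;
    }
    if (ioctl(fd, KCOV_ENABLE, KCOV_TRACE_PC)) {
        munmap(cover, COVER_SIZE * sizeof(unsigned long));
        close(fd);
        return -1;
    }
    /* cover[0] counts the PCs recorded so far; reset it before tracing. */
    __atomic_store_n(&cover[0], 0, __ATOMIC_RELAXED);
    ssize_t r = read(-1, NULL, 0);   /* the syscall being traced */
    (void)r;
    long n = (long)__atomic_load_n(&cover[0], __ATOMIC_RELAXED);
    /* cover[1..n] hold the recorded PCs; print the first few. */
    for (long i = 0; i < n && i < 4; i++)
        printf("0x%lx\n", cover[i + 1]);
    ioctl(fd, KCOV_DISABLE, 0);
    munmap(cover, COVER_SIZE * sizeof(unsigned long));
    close(fd);
    return n;
}
```

The first word of the buffer holds the number of recorded PCs and the PCs follow it; syz-executor reads its coverage from the same kind of mapped buffer.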

If you want to read more about KCOV and how to use it as a user who wants to collect the data, read the documentation [12].

Remote KCOV

By default, KCOV collects coverage only for the current task, that is, for kernel code executed in the context of the process that enabled it. However, code such as the NVMe-oF/TCP driver processes received data asynchronously in work queues, which run in kernel worker threads. KCOV cannot collect this data, as it doesn’t “know” that the queued work is related to the original task.

Fortunately for us, the kernel already provides a solution for this: remote KCOV. That said, collecting remote coverage requires annotating the relevant kernel sources and recompiling the kernel.

Remote KCOV Kernel Annotations

Despite sounding complex, adding remote coverage annotations is quite simple. All you need to be familiar with are the functions kcov_remote_start(u64 handle) and kcov_remote_stop().

```c
void my_kernel_task(int a) {
	kcov_remote_start(REMOTE_HANDLE);
	if (a < 10) {
		foo();
	}
	else {
		boo();
	}
	kcov_remote_stop();
}
```

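In the code above, we tell KCOV to start collecting coverage and associate it with REMOTE_HANDLE. Everything executed until kcov_remote_stop(), here either foo() or boo(), is attributed to that handle.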

Looking at syzkaller’s executor/executor_linux.h, and more specifically at the function cover_enable(), we see an ioctl call with the command KCOV_REMOTE_ENABLE. We won’t go deep into how it all works, but when this ioctl executes, the kernel stores the common handle passed in arg.common_handle in the task_struct of the current process (i.e., current->kcov_handle).

```c
static void cover_enable(cover_t* cov, bool collect_comps, bool extra)
{
	unsigned int kcov_mode = collect_comps ? KCOV_TRACE_CMP : KCOV_TRACE_PC;
	// The KCOV_ENABLE call should be fatal,
	// but in practice ioctl fails with assorted errors (9, 14, 25),
	// so we use exitf.
	if (!extra) {
		if (ioctl(cov->fd, KCOV_ENABLE, kcov_mode))
			exitf("cover enable write trace failed, mode=%d", kcov_mode);
		return;
	}
	kcov_remote_arg<1> arg = {
		.trace_mode = kcov_mode,
		// Coverage buffer size of background threads.
		.area_size = kExtraCoverSize,
		.num_handles = 1,
	};
	arg.common_handle = kcov_remote_handle(KCOV_SUBSYSTEM_COMMON, procid + 1);
	arg.handles[0] = kcov_remote_handle(KCOV_SUBSYSTEM_USB, procid + 1);
	if (ioctl(cov->fd, KCOV_REMOTE_ENABLE, &arg))
		exitf("remote cover enable write trace failed");
}
```
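The handles passed in arg.common_handle and arg.handles[] are plain 64-bit values. The sketch below reproduces the encoding defined in the kernel’s include/uapi/linux/kcov.h: the top byte selects a subsystem and the low 32 bits an instance, so procid + 1 gives every executor process its own handle (the + 1 presumably keeps the value non-zero, since the kernel ignores a zero common handle).

```c
#include <assert.h>
#include <stdint.h>

#define KCOV_SUBSYSTEM_COMMON (0x00ull << 56)
#define KCOV_SUBSYSTEM_USB    (0x01ull << 56)
#define KCOV_SUBSYSTEM_MASK   (0xffull << 56)
#define KCOV_INSTANCE_MASK    (0xffffffffull)

/* Combine a subsystem ID and an instance ID into one remote handle;
 * returns 0 for an invalid combination. */
static inline uint64_t kcov_remote_handle(uint64_t subsys, uint64_t inst) {
    if ((subsys & ~KCOV_SUBSYSTEM_MASK) || (inst & ~KCOV_INSTANCE_MASK))
        return 0;
    return subsys | inst;
}
```

Because KCOV_SUBSYSTEM_COMMON is 0, wrapping an already-encoded common handle in kcov_remote_handle(KCOV_SUBSYSTEM_COMMON, ...) again yields the same value. This is what happens when such a handle is passed to kcov_remote_start_common(), which performs exactly that wrapping.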

Adding Remote KCOV Support for NVMe-oF/TCP

Now that we know that syzkaller sets the kcov_handle of the input execution process, we need to find a way to pass this handle to the NVMe-oF/TCP driver so that it can start collecting coverage for each input we execute. However, we do not have access to the task_struct that executed the input in the first place (and that’s why we are here 😅).

For that mission, we must briefly examine how the NVMe-oF/TCP work queue receives its messages. It all starts with the queue initialization, which happens when the target accepts a new connection:

```c
static int nvmet_tcp_alloc_queue(struct nvmet_tcp_port *port,
		struct socket *newsock)
{
	...
	INIT_WORK(&queue->io_work, nvmet_tcp_io_work);
	...
}
```

The INIT_WORK() call tells us that the main function for handling new I/O work is nvmet_tcp_io_work(), which looks like this:

```c
static void nvmet_tcp_io_work(struct work_struct *w)
{
	struct nvmet_tcp_queue *queue =
		container_of(w, struct nvmet_tcp_queue, io_work);
	bool pending;
	int ret, ops = 0;

	do {
		pending = false;

		ret = nvmet_tcp_try_recv(queue, NVMET_TCP_RECV_BUDGET, &ops); // <---- Look here
		if (ret > 0)
			pending = true;
		else if (ret < 0)
			return;

		...
}
```

From there, we go into some wrapper functions until we get to nvmet_tcp_try_recv_one(), which directs the flow of the messages received into the corresponding handling functions.

```c
static int nvmet_tcp_try_recv_one(struct nvmet_tcp_queue *queue)
{
	int result = 0;

	if (unlikely(queue->rcv_state == NVMET_TCP_RECV_ERR))
		return 0;

	if (queue->rcv_state == NVMET_TCP_RECV_PDU) {
		result = nvmet_tcp_try_recv_pdu(queue);
		if (result != 0)
			goto done_recv;
	}

	if (queue->rcv_state == NVMET_TCP_RECV_DATA) {
		result = nvmet_tcp_try_recv_data(queue);
		if (result != 0)
			goto done_recv;
	}

	if (queue->rcv_state == NVMET_TCP_RECV_DDGST) {
		result = nvmet_tcp_try_recv_ddgst(queue);
		if (result != 0)
			goto done_recv;
	}

done_recv:
	if (result < 0) {
		if (result == -EAGAIN)
			return 0;
		return result;
	}
	return 1;
}
```

We’ll look at the flow that directs to nvmet_tcp_try_recv_pdu().

```c
static int nvmet_tcp_try_recv_pdu(struct nvmet_tcp_queue *queue)
{
	struct nvme_tcp_hdr *hdr = &queue->pdu.cmd.hdr;
	int len;
	struct kvec iov;
	struct msghdr msg = { .msg_flags = MSG_DONTWAIT };

recv:
	iov.iov_base = (void *)&queue->pdu + queue->offset;
	iov.iov_len = queue->left;
	len = kernel_recvmsg(queue->sock, &msg, &iov, 1,
			iov.iov_len, msg.msg_flags);
	...
}
```

nvmet_tcp_try_recv_pdu() is where the queued work first receives data: the driver calls kernel_recvmsg() to read the message from the socket. So, to pass a KCOV handle from the initiating process (syzkaller’s input executor) to the NVMe-oF/TCP driver, and thereby collect coverage for the executed syzkaller input, we need to carry it through struct msghdr.

Adding Handles To msghdr

When kernel_recvmsg() executes, the kernel takes struct sk_buff objects from the receive queue of the socket passed to the function and uses them to populate the struct msghdr that was passed as well. The actual copying from the struct sk_buff to the struct msghdr happens in tcp_recvmsg_locked().

For those of you who would like to take a closer look, the call stack of such an operation is shown below. Note that this call stack applies only to receiving a TCP message the way NVMe-oF/TCP receives its messages.

kernel_recvmsg() -> sock_recvmsg() -> sock_recvmsg_nosec() -> inet_recvmsg() -> tcp_recvmsg() -> tcp_recvmsg_locked()

Fortunately for us, about three years before this blog post was written, a commit that adds a KCOV handle field to struct sk_buff was merged into mainline (see the commit [13]). That means every message sent using struct sk_buff (e.g., TCP packets) carries the KCOV handle of the message-sending process. To access the skb’s KCOV handle [14], we can use the functions skb_get_kcov_handle() and skb_set_kcov_handle().

Back to tcp_recvmsg_locked(). During this copying operation, I wanted to also copy the KCOV handle from the sk_buff to the msghdr. To do so, a few changes were required:

- Adding a new kcov_handle field to struct msghdr

- Adding a getter and a setter for msghdr.kcov_handle

- Adding a msghdr_set_kcov_handle() call while the message is copied from the sk_buff to the msghdr

```c
struct msghdr {
	void		*msg_name;	/* ptr to socket address structure */
	int		msg_namelen;	/* size of socket address structure */
	...
#ifdef CONFIG_KCOV
	u64		kcov_handle;
#endif
};
```

```c
static inline void msghdr_set_kcov_handle(struct msghdr *msg,
					  const u64 kcov_handle)
{
#ifdef CONFIG_KCOV
	msg->kcov_handle = kcov_handle;
#endif
}

static inline u64 msghdr_get_kcov_handle(struct msghdr *msg)
{
#ifdef CONFIG_KCOV
	return msg->kcov_handle;
#else
	return 0;
#endif
}
```

```c
static int tcp_recvmsg_locked(struct sock *sk, struct msghdr *msg, size_t len,
			      int flags, struct scm_timestamping_internal *tss,
			      int *cmsg_flags)
{
	...
found_ok_skb: // --> #1
		/* Ok so how much can we use? */
		used = skb->len - offset;
		if (len < used)
			used = len;
#ifdef CONFIG_KCOV
		msghdr_set_kcov_handle(msg, skb_get_kcov_handle(skb)); // --> #2
#endif
		...
		if (!(flags & MSG_TRUNC)) {
			err = skb_copy_datagram_msg(skb, offset, msg, used);
			if (err) {
				/* Exception. Bailout! */
				if (!copied)
					copied = -EFAULT;
				break;
			}
		}
	...
}
```

In the third code block above, you can see that once an skb has been found (#1) and receiving starts, a new msghdr_set_kcov_handle() call (#2) copies the KCOV handle from skb to msg.
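The effect of this patch can be modeled in user space. In the C sketch below, fake_skb and fake_msghdr are simplified, hypothetical stand-ins for struct sk_buff and struct msghdr, and fake_recvmsg() mimics the found_ok_skb step: it decides how much to copy, records the sender’s handle in the message header, and then copies the payload.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for struct sk_buff. */
struct fake_skb {
    const char *data;
    size_t len;
    uint64_t kcov_handle;    /* set on the sending side */
};

/* Hypothetical stand-in for struct msghdr with the added field. */
struct fake_msghdr {
    char buf[64];
    size_t copied;
    uint64_t kcov_handle;
};

static void msghdr_set_kcov_handle(struct fake_msghdr *msg, uint64_t h) {
    msg->kcov_handle = h;
}

static uint64_t msghdr_get_kcov_handle(const struct fake_msghdr *msg) {
    return msg->kcov_handle;
}

/* Mirrors found_ok_skb: limit the copy, record the handle, copy data. */
static size_t fake_recvmsg(const struct fake_skb *skb,
                           struct fake_msghdr *msg, size_t len) {
    size_t used = skb->len;
    if (len < used)
        used = len;
    if (used > sizeof(msg->buf))
        used = sizeof(msg->buf);
    msghdr_set_kcov_handle(msg, skb->kcov_handle);
    memcpy(msg->buf, skb->data, used);
    msg->copied = used;
    return used;
}
```

One consequence of placing the call inside the copy loop of tcp_recvmsg_locked() is that, if a single recvmsg() call drains several skbs, the handle of the last skb copied is the one left in msg.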

Adding KCOV Annotations to NVMe-oF/TCP

To complete the KCOV support, we need to add the annotations to the correct places.

```c
static int nvmet_tcp_try_recv_pdu(struct nvmet_tcp_queue *queue)
{
	struct nvme_tcp_hdr *hdr = &queue->pdu.cmd.hdr;
	int len;
	struct kvec iov;
	struct msghdr msg = { .msg_flags = MSG_DONTWAIT };
	int kcov_started = 0;
	int res;

recv:
	iov.iov_base = (void *)&queue->pdu + queue->offset;
	iov.iov_len = queue->left;
	len = kernel_recvmsg(queue->sock, &msg, &iov, 1,
			iov.iov_len, msg.msg_flags); // #1
	if (!kcov_started) { // #2
		kcov_started = 1; // #2.1
		kcov_remote_start_common(msghdr_get_kcov_handle(&msg)); // #2.2
	}
	if (unlikely(len < 0)) {
		kcov_remote_stop(); // #3
		return len;
	}
	queue->offset += len;
	queue->left -= len;
	if (queue->left) {
		kcov_remote_stop(); // #3
		return -EAGAIN;
	}

	if (queue->offset == sizeof(struct nvme_tcp_hdr)) {
		u8 hdgst = nvmet_tcp_hdgst_len(queue);

		if (unlikely(!nvmet_tcp_pdu_valid(hdr->type))) {
			pr_err("unexpected pdu type %d\n", hdr->type);
			nvmet_tcp_fatal_error(queue);
			kcov_remote_stop(); // #3
			return -EIO;
		}

		if (unlikely(hdr->hlen != nvmet_tcp_pdu_size(hdr->type))) {
			pr_err("pdu %d bad hlen %d\n", hdr->type, hdr->hlen);
			kcov_remote_stop(); // #3
			return -EIO;
		}

		queue->left = hdr->hlen - queue->offset + hdgst;
		goto recv; // #4
	}

	if (queue->hdr_digest &&
	    nvmet_tcp_verify_hdgst(queue, &queue->pdu, hdr->hlen)) {
		nvmet_tcp_fatal_error(queue); /* fatal */
		kcov_remote_stop(); // #3
		return -EPROTO;
	}

	if (queue->data_digest &&
	    nvmet_tcp_check_ddgst(queue, &queue->pdu)) {
		nvmet_tcp_fatal_error(queue); /* fatal */
		kcov_remote_stop(); // #3
		return -EPROTO;
	}

	res = nvmet_tcp_done_recv_pdu(queue);
	kcov_remote_stop(); // #3
	return res;
}
```

1. As described above, after kernel_recvmsg() returns, the msg variable contains the KCOV handle of the executed input.

2. This code may run more than once, because the function can jump back to the recv label (see #4). Thus, we check whether coverage collection for this handle has already started or should start now.

2.1. Set the kcov_started flag to 1.

2.2. Start coverage collection for this handle using kcov_remote_start_common().

3. Whenever we reach a block that ends the processing of the received message, we stop coverage collection using kcov_remote_stop().
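The invariant behind these annotations is that coverage collection starts once per call, even when the header path jumps back to recv, and stops exactly once on every exit path. The C sketch below models this with counting stand-ins so the balance can be checked; fake_kcov_remote_start_common(), fake_kcov_remote_stop(), and recv_pdu_model() are hypothetical and are not the kernel API.

```c
#include <assert.h>
#include <stdint.h>

static int starts, stops, active;

/* Counting stand-in; nested starts are not allowed in this model. */
static void fake_kcov_remote_start_common(uint64_t handle) {
    (void)handle;
    assert(!active);
    active = 1;
    starts++;
}

static void fake_kcov_remote_stop(void) {
    assert(active);
    active = 0;
    stops++;
}

/* `rounds` simulates how many times the code reaches the recv label;
 * `fail` makes the final round take an error exit. */
static int recv_pdu_model(uint64_t handle, int rounds, int fail) {
    int kcov_started = 0;
    int iter = 0;
recv:
    if (!kcov_started) {
        kcov_started = 1;
        fake_kcov_remote_start_common(handle);
    }
    if (++iter < rounds)
        goto recv;                 /* header parsed, read the rest */
    if (fail) {
        fake_kcov_remote_stop();   /* error exit */
        return -1;
    }
    fake_kcov_remote_stop();       /* normal exit */
    return 0;
}
```

A single exit label (for example, goto out, with one kcov_remote_stop() before the return) would be an alternative to repeating the stop call; the patch above keeps the original return statements and adds a stop before each one.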

Running It All

That is it. All that remains is to write the syzkaller manager configuration file, run the fuzzer, and hope for the best.

The configuration I used for the fuzzing is as follows:

```json
{
	"target": "linux/amd64",
	"http": "0.0.0.0:56742",
	"workdir": "/home/user/nvme_docs/syzkaller/workdir",
	"kernel_obj": "/home/user/nvme_docs/linux",
	"image": "/home/user/nvme_docs/syz_image/bullseye.img",
	"sshkey": "/home/user/nvme_docs/syz_image/bullseye.id_rsa",
	"syzkaller": "/home/user/nvme_docs/syzkaller",
	"procs": 1,
	"sandbox": "none",
	"type": "qemu",
	"vm": {
		"count": 9,
		"kernel": "/home/user/nvme_docs/linux/arch/x86/boot/bzImage",
		"cpu": 1,
		"mem": 2048,
		"qemu_args": "-device nvme,drive=nvm,serial=deadbeef -drive file=/home/user/nvme_docs/syz_image/nvme.img,if=none,id=nvm,format=raw -enable-kvm -cpu host,migratable=off"
	},
	"enable_syscalls": [
		"syz_socket_connect_nvme_tcp",
		"sendto$inet_nvme_*",
		"close",
		"recvmsg$inet_nvme",
		"recvfrom$inet_nvme"
	]
}
```

Lastly, run it using:

```shell
sudo ./syzkaller/bin/syz-manager -config=nvme_socket.cfg
```

Findings

Here are all the bugs and vulnerabilities this fuzzer has found so far.

| Bug | Fix / Discussion | CVE |
|---|---|---|
| KASAN: slab-use-after-free Read in process_one_work | Openwall Report | CVE-2023-5178 |
| KASAN: slab-out-of-bounds Read in nvmet_ctrl_find_get | Discussion | CVE-2023-6121 |
| BUG: unable to handle kernel NULL pointer dereference in __nvmet_req_complete | Discussion | CVE-2023-6536 |
| BUG: unable to handle kernel NULL pointer dereference in nvmet_tcp_build_pdu_iovec | Discussion | CVE-2023-6356 |
| BUG: unable to handle kernel NULL pointer dereference in nvmet_tcp_io_work | Discussion | CVE-2023-6535 |

What’s Next

Looking at the coverage map that syzkaller provides, and at the areas in which the fuzzer found the bugs, I noticed that only some parts of the driver were covered and fuzzed. This is because coverage is collected only in the functions we annotated with remote KCOV. I’m sure that by adding remote KCOV annotations to more areas of the subsystem, syzkaller will be able to cover more ground and find other bugs and vulnerabilities.

Moreover, it is also a great practice to check the coverage and see if some modifications to the subsystem description will help syzkaller reduce the redundancy or cover more code.

Closing Notes

Using syzkaller, which can easily be extended to additional subsystems, we found and reported five bugs and vulnerabilities in the NVMe-oF/TCP driver for Linux.

As a side note, I would like to give a shout-out to similar and incredibly good research, conducted in parallel to this one, on fuzzing the ksmbd subsystem of the Linux kernel [15], which also exposes an INET socket from the kernel to the outside world. I strongly encourage you to read it.

References

- NVMe: New Vulnerabilities Made Easy, CyberArk Blog – https://www.cyberark.com/resources/threat-research-blog/nvme-new-vulnerabilities-made-easy

- Syzkaller official GitHub repository – https://github.com/google/syzkaller

- Fuzzing, OWASP.org – https://owasp.org/www-community/

- Coverage Guided vs Blackbox Fuzzing – https://google.github.io/clusterfuzz/reference/coverage-guided-vs-blackbox/

- Syzkaller’s coverage documentation – https://github.com/google/syzkaller/blob/master/docs/coverage.md

- How to build a fuzzing corpus – https://blog.isosceles.com/how-to-build-a-corpus-for-fuzzing/

- open(2) syscall, Linux v6.5 – https://elixir.bootlin.com/linux/v6.5/source/fs/open.c#L1426

- Syzkaller’s syscall description language – https://github.com/google/syzkaller/blob/master/docs/syscall_descriptions_syntax.md

- NVMe Specifications – https://nvmexpress.org/wp-content/uploads/NVM-Express-TCP-Transport-Specification-2021.06.02-Ratified.pdf

- Linux Namespaces(7) manpage – https://man7.org/linux/man-pages/man7/namespaces.7.html

- Syzkaller’s pseudo-syscalls documentation – https://github.com/google/syzkaller/blob/master/docs/pseudo_syscalls.md

- KCOV, kernel.org – https://www.kernel.org/doc/html/latest/dev-tools/kcov.html

- KCOV addition to sk_buff, the Linux repository on github – https://github.com/torvalds/linux/commit/fa69ee5aa48b5b52e8028c2eb486906e9998d081

- `skb_set_kcov_handle` – https://elixir.bootlin.com/linux/v6.5/source/include/linux/skbuff.h#L5016

- Tickling ksmbd: fuzzing SMB in the Linux kernel – https://pwning.tech/ksmbd-syzkaller/

Alon Zahavi is a CyberArk Labs Vulnerability Researcher.