Several years ago, when I spoke with people about containers, most of them were not familiar with the term. Today, it is unquestionably one of the most popular technologies used in DevOps systems. Containers provide several advantages, such as reliability, more consistent operation and greater efficiency. Kubernetes also contributed to their popularity. As containers became popular, though, they were used mostly on Linux systems.

In 2015, Microsoft introduced Windows containers after working with Docker. Although the technology has been available for a while, it is still less popular than the classic Linux containers, and there has been very little written about it, especially from a security perspective. But in 2019, I read the article by Daniel Prizmant about reversing Windows containers, which was a great start for me in that field of research. He also published three more articles about a unique technique called “Siloescape” to break out from the container to the host.

After that, in April 2021, James Forshaw published the article “Who Contains the Containers?” and showed a couple of vulnerabilities he found that, when chained together, provide a full breakout from the container to the host.

I decided to join the party and do my own research to contribute to this area.

TL;DR, in this blog post, you will learn in-depth details about Windows containers and the communications between the host and the container in Windows OS. I found it interesting, and I hope you do, too.

You are not going to see new vulnerabilities here, but you will see our new open-source GUI tool, “RPCMon,” which can monitor RPC calls and show their function names. This tool has many other capabilities, like filtering rows, highlighting them, and more. We believe it will improve your RPC research and make it easier to see what happens in a high-level view.

Containers in Windows

Windows containers were created to run Windows and Linux applications with all their dependencies inside the Windows operating system. Microsoft needed to create new components to handle it. There are two supported types: Hyper-V Isolated containers and Windows Server containers.

Hyper-V Isolated Containers

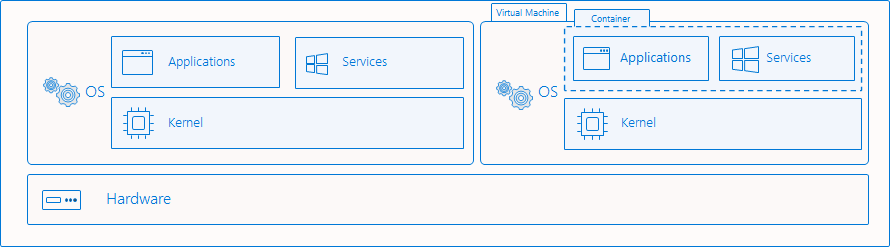

In this method (Figure 1), the container is running inside a fully isolated Hyper-V virtual machine. This method has two sub-models: Linux containers in a Moby VM and Linux containers on Windows (LCOW).

Figure 1- Hyper-V Isolated Containers Architecture (taken from Microsoft)

To use it through Docker, you need to specify --isolation hyperv, like this:

docker run -it --isolation hyperv mcr.microsoft.com/windows/nanoserver:1809 cmd.exe

1. Linux Containers in a Moby VM

In this method, we have a full Hyper-V virtual machine that runs the Linux containers. In the Windows container host, Docker is running and calls the Docker Daemon on the Linux virtual machine.

2. Linux Containers with WSL 2

This method was called “Linux Containers on Windows (LCOW),” but now it is deprecated and has been replaced with WSL 2 (Windows Subsystem for Linux).

In this method, similar to the previous method, the Linux version of the Docker daemon will run inside WSL2 rather than a regular Hyper-V virtual machine.

Windows Server Containers (“Process Isolation”)

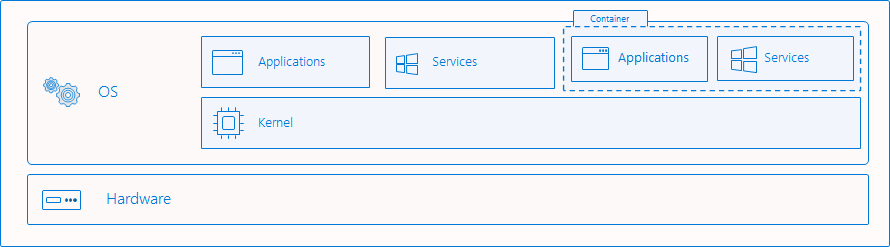

In this method (Figure 2), also known as “Process Isolation” or “Windows Process Container,” the kernel is shared with the host and the container works as a single job. It uses a Silo object, which is based on the job objects in Windows but with additional rules and capabilities, a kind of super-job. There are two types of Silo objects: a server silo, which is used for the Docker container support and requires administrator privileges, and an application silo that is used to implement the desktop bridge.

Currently, this isolation method can be enabled only on Windows Server, but it should also be available starting with Windows 11.

Figure 2 – Process Isolation Architecture (taken from Microsoft)

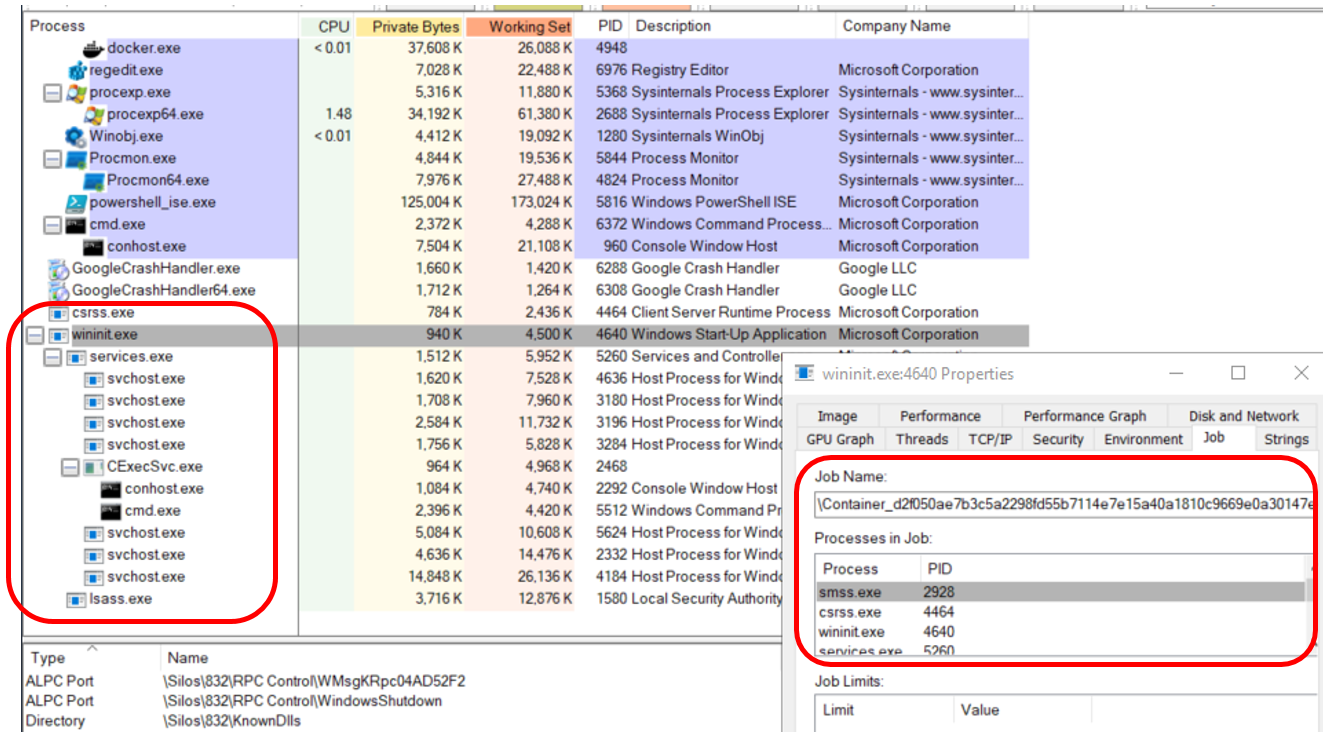

This is the method I used for this research, and here is an example of how it looks through Process Explorer (Figure 3).

Figure 3 – Container Processes Through Process Explorer

All the marked processes are under one Job (Silo object).

To use it through Docker, you need to specify --isolation process, like this:

docker run -it --isolation process mcr.microsoft.com/windows/nanoserver:1809 cmd.exe
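To make the difference between the two modes concrete, here is a minimal Python sketch that only builds the command line for either isolation mode. The helper name build_docker_run is my own, and the list of accepted values ("default", "process", "hyperv") reflects my understanding of what docker run --isolation accepts on Windows hosts; the sketch does not run Docker.

```python
# Isolation values that `docker run --isolation` is assumed to accept on Windows hosts.
VALID_ISOLATION = ("default", "process", "hyperv")


def build_docker_run(isolation, image, command):
    """Return the argv list for an interactive `docker run` with the given isolation mode.

    Hypothetical helper for illustration; it only builds the command, it does not run it.
    """
    if isolation not in VALID_ISOLATION:
        raise ValueError(f"unsupported isolation mode: {isolation!r}")
    return ["docker", "run", "-it", "--isolation", isolation, image, command]


if __name__ == "__main__":
    # Same image and command as the examples in this post.
    image = "mcr.microsoft.com/windows/nanoserver:1809"
    for mode in ("hyperv", "process"):
        print(" ".join(build_docker_run(mode, image, "cmd.exe")))
```

The only difference between the two commands is the value passed to --isolation; everything else (image, command, interactive flags) stays the same.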

After understanding the basics, it is time to continue.

Choosing an Attack Vector

When I started to work with Windows containers, I noticed there is a lot going on behind the scenes, and I needed to map the attack vectors and decide where I want to take them. The main goal is to break out from the container to the host. Another goal is to think about how I can use the Windows containers components to access containers or escalate my privileges.

I decided to look at the ways I can break out from the container to the host. Here are two ideas I had:

- From within the container, take control of the host by taking control of the communication between the Docker daemon and the container.

- Analyze the kernel functions that check for Silo object (like James Forshaw did).

I decided to go with the first one, because this method offered a better chance to learn more about how things work between the Docker daemon and the container. For example, when someone is running docker exec from outside the container, what happens behind the scenes? The first step was to learn about the architecture, which can expose more attack vectors as you get a better understanding of how it works.

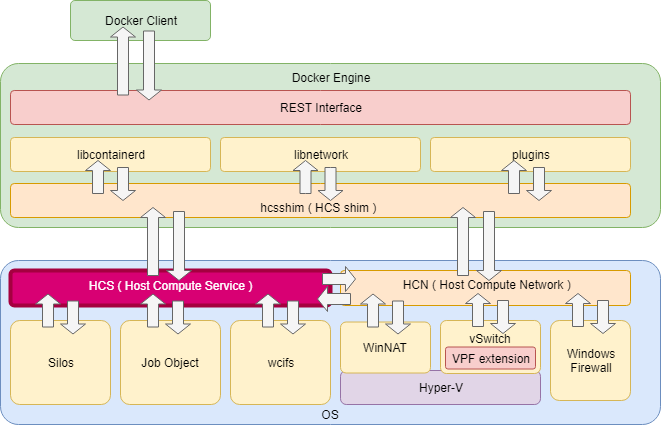

Windows Container Architecture

After installing Docker Desktop on Windows, we will have the docker client (“docker.exe”) and the docker daemon/engine (“dockerd.exe”, an open-source project). Every command executed by the docker client is translated to a REST API call and passed to the docker daemon. The docker daemon then moves it through one of its components (libcontainerd, libnetwork or plugins) to the HCS Shim (hcsshim). This is an open-source project by Microsoft that was created as an interface for using the Windows Host Compute Service (HCS), a Windows service named vmcompute.exe (Hyper-V Host Compute Service), to launch and manage Windows containers (Figure 4). It also contains other helpers to communicate with the Host Compute Network (HCN).

Figure 4 – Windows Containers Architecture (taken from this blog)
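As a rough illustration of the first hop in this chain (client command to REST call), the sketch below maps two docker commands to the HTTP requests I would expect the daemon to receive. The paths follow the Docker Engine API as I understand it, and the v1.41 prefix is taken from the dockerd log shown later in this post; the function name rest_calls and the "<exec_id>" placeholder are mine.

```python
API_VERSION = "v1.41"  # version prefix seen in the dockerd log later in this post


def rest_calls(cli_command, container_id):
    """Return the (HTTP method, path) pairs a docker CLI command is expected to trigger.

    Illustrative mapping only; it covers just the two commands discussed in this post.
    """
    base = f"/{API_VERSION}"
    if cli_command == "start":
        return [("POST", f"{base}/containers/{container_id}/start")]
    if cli_command == "exec":
        # exec is a two-step call: create an exec instance, then start it.
        # "<exec_id>" stands for the ID returned by the first call.
        return [
            ("POST", f"{base}/containers/{container_id}/exec"),
            ("POST", f"{base}/exec/<exec_id>/start"),
        ]
    raise ValueError(f"command not covered by this sketch: {cli_command!r}")


if __name__ == "__main__":
    for method, path in rest_calls("exec", "57c"):
        print(method, path)
```

From the daemon onward, the request no longer travels over HTTP; it goes through hcsshim to the HCS, which is the part the rest of this post focuses on.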

After the request is received by the HCS, the HCS will eventually execute the command. Let’s look at a simple example of creating a new container in Windows.

Creating a New Windows Container

When running a new container through the docker client, the command will eventually end in the HCS.

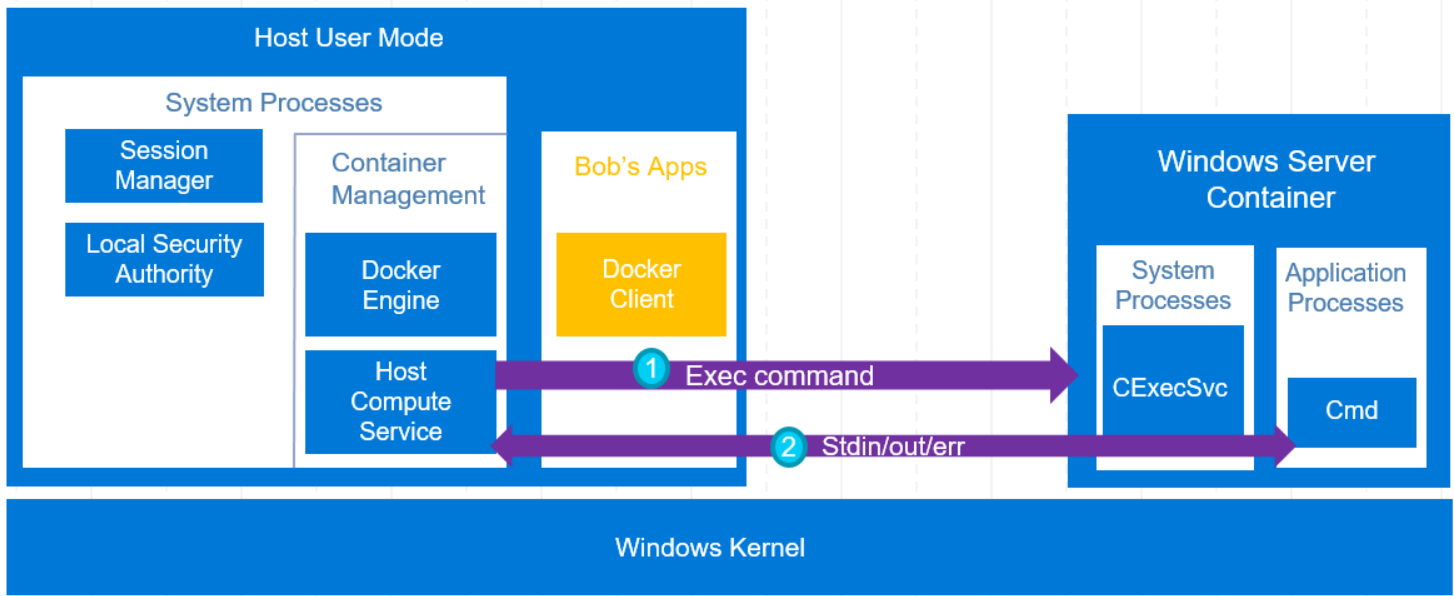

The HCS will create a new Silo object with all the required components. Inside the container (Silo object), we will have the default Windows system processes (Figure 2). One special process is CExecSvc, which is a service inside the container that implements the container execution service. After this service is running, the HCS will communicate with it (Figure 5, No. 1) and then set up a stdin/stdout pipe (Figure 5, No. 2).

Figure 5 – Execute Command to Windows Container (taken from DockerCon Lecture)

Now that we have a good knowledge of how things work at the high level, it’s time to drill down and understand how the communication works between the HCS and CExecSvc.

HCS and RPC Communication

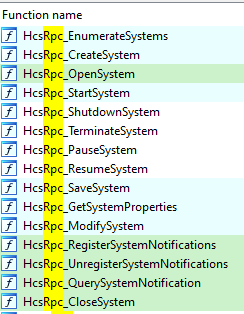

There are a number of options when it comes to inter-process communication (IPC) in Windows. The most common are RPC, named pipes, and COM objects. From a brief look at the HCS’s code, it seems the HCS is using remote procedure calls (RPC) (Figure 6). RPC is a technology to enable data communication between a client and a server across process and machine boundaries (network communication). If you are not too familiar with RPC communication, I recommend reading this great article that explains everything you need to know about RPC in Windows.

Figure 6 – vmcompute.exe RPC functions from IDA

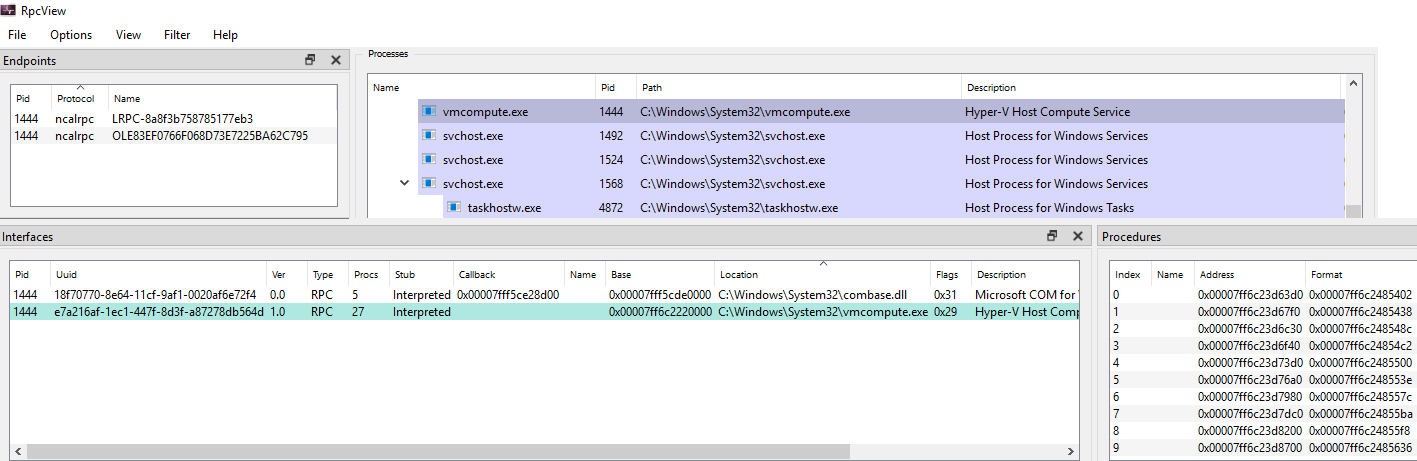

I wanted to verify it, so I used RpcView with symbols. Although I could see most of the functions in other processes, it didn’t show me the function names of the HCS RPC server (Figure 7). But I could understand that HCS has an RPC server that uses the Advanced Local Procedure Call (ALPC) protocol for the RPC runtime. The protocol can be identified in the Endpoints window by ncalrpc which stands for Network Computing Architecture Local Remote Procedure Call.

Figure 7 – VMcompute details in RPCView

I searched for other tools and noticed that James Forshaw mentioned in this article that he also tried RpcView and it wasn’t enough for his purposes. This is why I decided to use the new RPC implementation he developed in NtObjectManager (you can read the previous article or watch his lecture about it).

Analyzing HCS (vmcompute.exe)

I used James Forshaw’s RPC implementation from NtObjectManager tools to extract the RPC information:

PS> Install-Module -Name NtObjectManager

PS> $rpc = Get-RpcServer C:\Windows\System32\vmcompute.exe -DbgHelpPath "C:\Program Files (x86)\Windows Kits\10\Debuggers\x64\dbghelp.dll"

PS> $rpc

Name UUID Ver Procs EPs Service Running

---- ---- --- ----- --- ------- -------

vmcompute.exe e7a216af-1ec1-447f-8d3f-a87278db564d 1.0 27 1 vmcompute True

PS> $rpc | fl

InterfaceId : e7a216af-1ec1-447f-8d3f-a87278db564d

InterfaceVersion : 1.0

TransferSyntaxId : 8a885d04-1ceb-11c9-9fe8-08002b104860

TransferSyntaxVersion : 2.0

ProcedureCount : 27

Procedures : {Proc0, Proc1, Proc2, Proc3...}

Server : UUID: e7a216af-1ec1-447f-8d3f-a87278db564d

ComplexTypes : {Struct_0}

FilePath : C:\Windows\System32\vmcompute.exe

Name : vmcompute.exe

Offset : 2361536

ServiceName : vmcompute

ServiceDisplayName : Hyper-V Host Compute Service

IsServiceRunning : True

Endpoints : {[e7a216af-1ec1-447f-8d3f-a87278db564d, 1.0] ncalrpc:[LRPC-e68ba07606d37d98d8]}

EndpointCount : 1

Client : False
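The Endpoints field above has the form protocol:[endpoint]. Since the endpoint name appears to change between runs (a different LRPC name shows up later in this post), a small parser can be handy when scripting around this output. This is a minimal sketch; the function name parse_binding is mine, and it only handles the simple protocol:[endpoint] form shown above.

```python
import re

# Matches strings such as "ncalrpc:[LRPC-e68ba07606d37d98d8]".
_BINDING = re.compile(r"^(?P<protseq>[A-Za-z0-9_]+):\[(?P<endpoint>[^\]]*)\]$")


def parse_binding(text):
    """Split a 'protocol:[endpoint]' string into (protocol sequence, endpoint name)."""
    match = _BINDING.match(text.strip())
    if match is None:
        raise ValueError(f"not a protocol:[endpoint] string: {text!r}")
    return match.group("protseq"), match.group("endpoint")


if __name__ == "__main__":
    protseq, endpoint = parse_binding("ncalrpc:[LRPC-e68ba07606d37d98d8]")
    print(protseq, endpoint)
```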

We received the RPC server information of HCS. We can also view the RPC server functions:

PS > $rpc.Procedures | select Name

Name

----

HcsRpc_EnumerateSystems

HcsRpc_CreateSystem

HcsRpc_OpenSystem

HcsRpc_StartSystem

HcsRpc_ShutdownSystem

HcsRpc_TerminateSystem

HcsRpc_PauseSystem

HcsRpc_ResumeSystem

HcsRpc_SaveSystem

HcsRpc_GetSystemProperties

HcsRpc_ModifySystem

HcsRpc_RegisterSystemNotifications

HcsRpc_UnregisterSystemNotifications

HcsRpc_QuerySystemNotification

HcsRpc_CloseSystem

HcsRpc_CreateProcess

HcsRpc_OpenProcess

HcsRpc_SignalProcess

HcsRpc_GetProcessInfo

HcsRpc_GetProcessProperties

HcsRpc_ModifyProcess

HcsRpc_RegisterProcessNotifications

HcsRpc_UnregisterProcessNotifications

HcsRpc_QueryProcessNotification

HcsRpc_CloseProcess

HcsRpc_GetServiceProperties

HcsRpc_ModifyServiceSettings

This information can be helpful in understanding the whole process, from the docker client to CExecSvc, because the HCS exposes these functions on the host. After the docker client communicates with the docker daemon (dockerd.exe), the docker daemon calls one of the functions exposed above. Once the HCS RPC server receives a call from the docker daemon, it communicates with CExecSvc through the RPC functions that CExecSvc exposes.

Before we continue, notice that the processes inside the container can’t see the HCS RPC server functions, and they don’t know that such a service even exists because it runs inside the host. Therefore, from within the container, we can’t use them. But this is where the CExecSvc functions come into play.

Analyzing CExecSvc

There is not much information about CExecSvc, but you can read about it in the latest Windows Internals book (7th edition, Part 1, page 190):

“This service uses a named pipe to communicate with the Docker and Vmcompute services on the host, and is used to launch the actual containerized applications in the session. It is also used to emulate the console functionality that is normally provided by Conhost.exe, piping the input and output through the named pipe to the actual command prompt (or PowerShell) window that was used in the first place to execute the docker command on the host. This service is also used when using commands such as docker cp to transfer files from or to the container.”

I used the same tools on this service, analyzing it from the host:

PS > $rpc = Get-RpcServer C:\Windows\System32\CExecSvc.exe -DbgHelpPath "C:\Program Files (x86)\Windows Kits\10\Debuggers\x64\dbghelp.dll"

PS > $rpc

Name UUID Ver Procs EPs Service Running

---- ---- --- ----- --- ------- -------

CExecSvc.exe 75ef42c7-22f4-44a0-8200-9351cd316e01 1.0 4 0 False

PS C:\Users\Administrator> $rpc | fl

InterfaceId : 75ef42c7-22f4-44a0-8200-9351cd316e01

InterfaceVersion : 1.0

TransferSyntaxId : 8a885d04-1ceb-11c9-9fe8-08002b104860

TransferSyntaxVersion : 2.0

ProcedureCount : 4

Procedures : {CExecCreateProcess, CExecResizeConsole, CExecSignalProcess, CExecShutdownSystem}

Server : UUID: 75ef42c7-22f4-44a0-8200-9351cd316e01

ComplexTypes : {}

FilePath : C:\tmp\CExecSvc.exe

Name : CExecSvc.exe

Offset : 138752

ServiceName :

ServiceDisplayName :

IsServiceRunning : False

Endpoints : {}

EndpointCount : 0

Client : False

PS C:\Users\Administrator> $rpc.Procedures | select Name

Name

----

CExecCreateProcess

CExecResizeConsole

CExecSignalProcess

CExecShutdownSystem

We see it has four functions, and from their names, you can understand what they do. At this point, we know that these two services, HCS and CExecSvc, each have their own RPC server that exposes functions. Let’s understand how they work together.

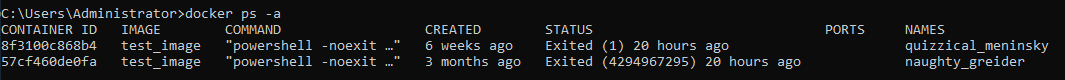

The Process of Executing a Shell in a Windows Container

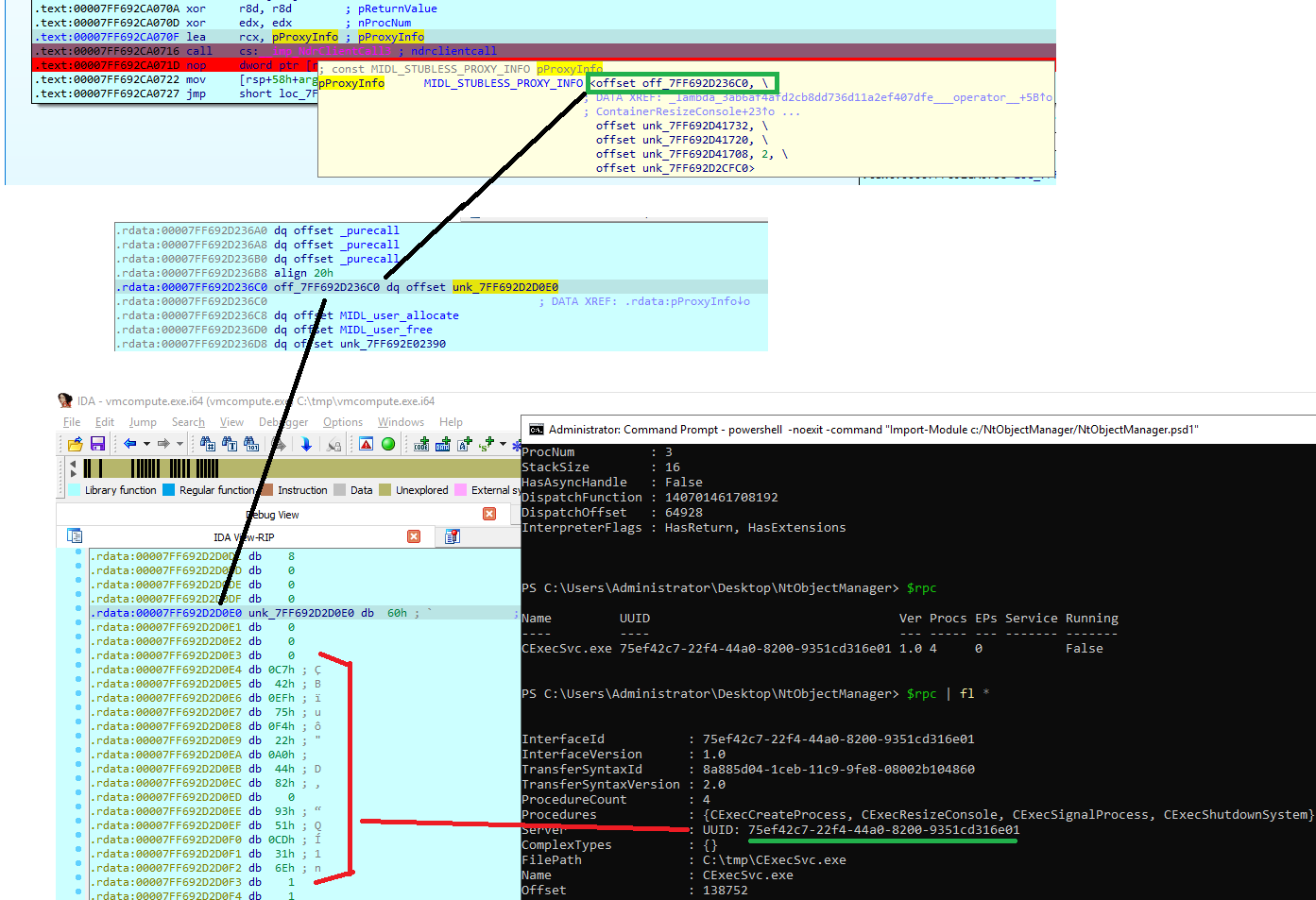

I attached vmcompute (HCS) and CExecSvc to IDA in order to debug the process. After running docker exec -it <container_id> cmd, we get a shell, but behind the scenes, execution first breaks in HCS on HcsRpc_CreateProcess and continues all the way down to the RPC function NdrClientCall3:

HcsRpc_CreateProcess -> ComputeService::Management::ContainerProcessOrchestrator::ExecuteProcess -> ContainerStartProcess -> _lambda_3ab6af4afd2cb8dd736d11a2ef407dfe___operator__ -> imp_NdrClientCall3

The NdrClientCall3 function, based on Microsoft documentation, receives the procedure number (nProcNum), which in our case is 0 (CExecCreateProcess), and the information about the RPC (pProxyInfo, see Figure 8).

Figure 8 – View of pProxyInfo from IDA

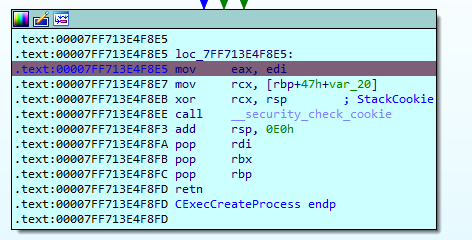

After calling imp_NdrClientCall3, the HCS is waiting for CExecSvc to respond. Inside CExecSvc, it breaks on CExecCreateProcess. It passes the information to the large function named CExec::Svc::Details::ExecuteProcess, impersonates the logged-on user to the container, and creates the required process, in our case cmd.exe:

CExecCreateProcess -> CExec::Svc::Details::ExecuteProcess -> ImpersonateLoggedOnUser -> CreateProcessAsUserW ->

The result is returned in eax (Figure 9).

Figure 9 – EAX return value in CExecCreateProcess

Success is when eax equals zero. After that, we go back to the HCS, where the result is returned by the NdrClientCall3 function and continues back to the docker daemon.

One interesting thing about CExecSvc is that if you stop this service from within the container, the container will still be running, but no one will be able to get a shell inside. They will get this kind of error:

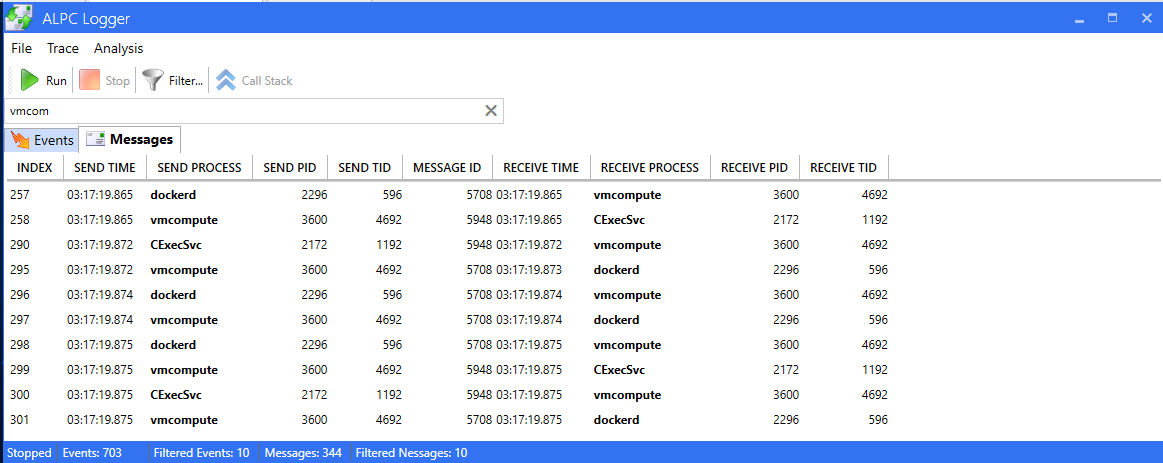

C:\Users\Administrator>docker exec -it 57c cmd

container 57cf460de0fa5d14b42f2b98150bdc076efc082a84168aa0633d7a66562ec999 encountered an error during hcsshim::System::CreateProcess: failure in a Windows system call: The RPC server is unavailable. (0x6ba)

But you can always start it again and the container will be accessible. One idea was to create a fake CExecSvc or just inject some custom code to control the return value, but before going there, I wanted to check if it was going to be worth it.

I debugged this service and tried to return different error codes (Figure 10) instead of zero (success), but I didn’t find an interesting behavior, so I didn’t continue with this vector.

Figure 10 – Container with modified exit value

On a couple of occasions when I played with the return value, I observed strange behavior: seemingly at random, I couldn’t start any container. Even restarting the service didn’t help. I couldn’t reproduce it directly, but I did notice it usually happened after playing with the return value, pausing the virtual machine, and starting it again the next day. Maybe it is related to the use of VMware.

The error message I got:

C:\Users\Administrator\Desktop\docker>dockerd -l error

time="2021-11-30T02:25:20.475638600-08:00" level=error msg="failed to start container" error="container 8f3100c868b443db8b78777dfcd67d13c451bf41dfd11a2eb2a36509b793749d encountered an error during hcsshim::System::Start: failure in a Windows system call: The virtual machine or container exited unexpectedly. (0xc0370106)" module=libcontainerd namespace=moby

time="2021-11-30T02:25:20.478041100-08:00" level=error msg="failed to cleanup after a failed Start" error="container 8f3100c868b443db8b78777dfcd67d13c451bf41dfd11a2eb2a36509b793749d encountered an error during hcsshim::System::waitBackground: failure in a Windows system call: The virtual machine or container exited unexpectedly. (0xc0370106)" module=libcontainerd namespace=moby

time="2021-11-30T02:25:20.985650400-08:00" level=error msg="8f3100c868b443db8b78777dfcd67d13c451bf41dfd11a2eb2a36509b793749d cleanup: failed to delete container from containerd: no such container"

time="2021-11-30T02:25:20.990157800-08:00" level=error msg="Handler for POST /v1.41/containers/8f3/start returned error: container 8f3100c868b443db8b78777dfcd67d13c451bf41dfd11a2eb2a36509b793749d encountered an error during hcsshim::System::Start: failure in a Windows system call: The virtual machine or container exited unexpectedly. (0xc0370106)"
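Log entries like these are key=value pairs with quoted values, so they are easy to pick apart when you want to collect the error codes across many failed attempts. Here is a minimal Python sketch, assuming the quoting style shown above (double quotes, no embedded quotes); the function names are mine.

```python
import re
import shlex

# Error codes appear in parentheses at the end of the message, e.g. "(0xc0370106)".
_CODE = re.compile(r"\((0x[0-9a-fA-F]+)\)")

SAMPLE = (
    'time="2021-11-30T02:25:20.475638600-08:00" level=error '
    'msg="failed to start container" '
    'error="container 8f3100c868b443db8b78777dfcd67d13c451bf41dfd11a2eb2a36509b793749d '
    'encountered an error during hcsshim::System::Start: failure in a Windows system call: '
    'The virtual machine or container exited unexpectedly. (0xc0370106)" '
    'module=libcontainerd namespace=moby'
)


def parse_log_line(line):
    """Split one dockerd log entry into a dict of its key=value fields."""
    fields = {}
    for token in shlex.split(line):  # shlex keeps quoted values together
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


def error_codes(line):
    """Return every parenthesized hex code found in the entry, as integers."""
    return [int(code, 16) for code in _CODE.findall(line)]


if __name__ == "__main__":
    entry = parse_log_line(SAMPLE)
    print(entry["level"], entry["msg"], [hex(c) for c in error_codes(SAMPLE)])
```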

To sum it up, I created a flow chart of the process in Figure 11.

Figure 11 – Flow of docker executing a shell in a container

ALPC Monitoring

We now know how the process of getting a shell inside a container works, but it required me to reverse it and understand which RPC functions are executed when someone uses, for example, docker exec. I searched for something like Procmon but for RPC: a tool that shows all the calls and functions. RPCView, for example, wasn’t enough for me because it doesn’t monitor function calls; it only shows which functions each RPC server has.

A new tool presented at BlackHat Europe 2021 by Sagi Dulce from ZeroNetwork called “rpcfirewall” was an interesting candidate because it wrote to the event viewer, but required you to give it the PID of the process you want to monitor and then injected it with a DLL that hooks the NdrClientCall. With protected processes, it was more complicated, and eventually, it didn’t export the function name. Hooking was an option, but I wanted something that could catch all the processes without the need to inject them with code.

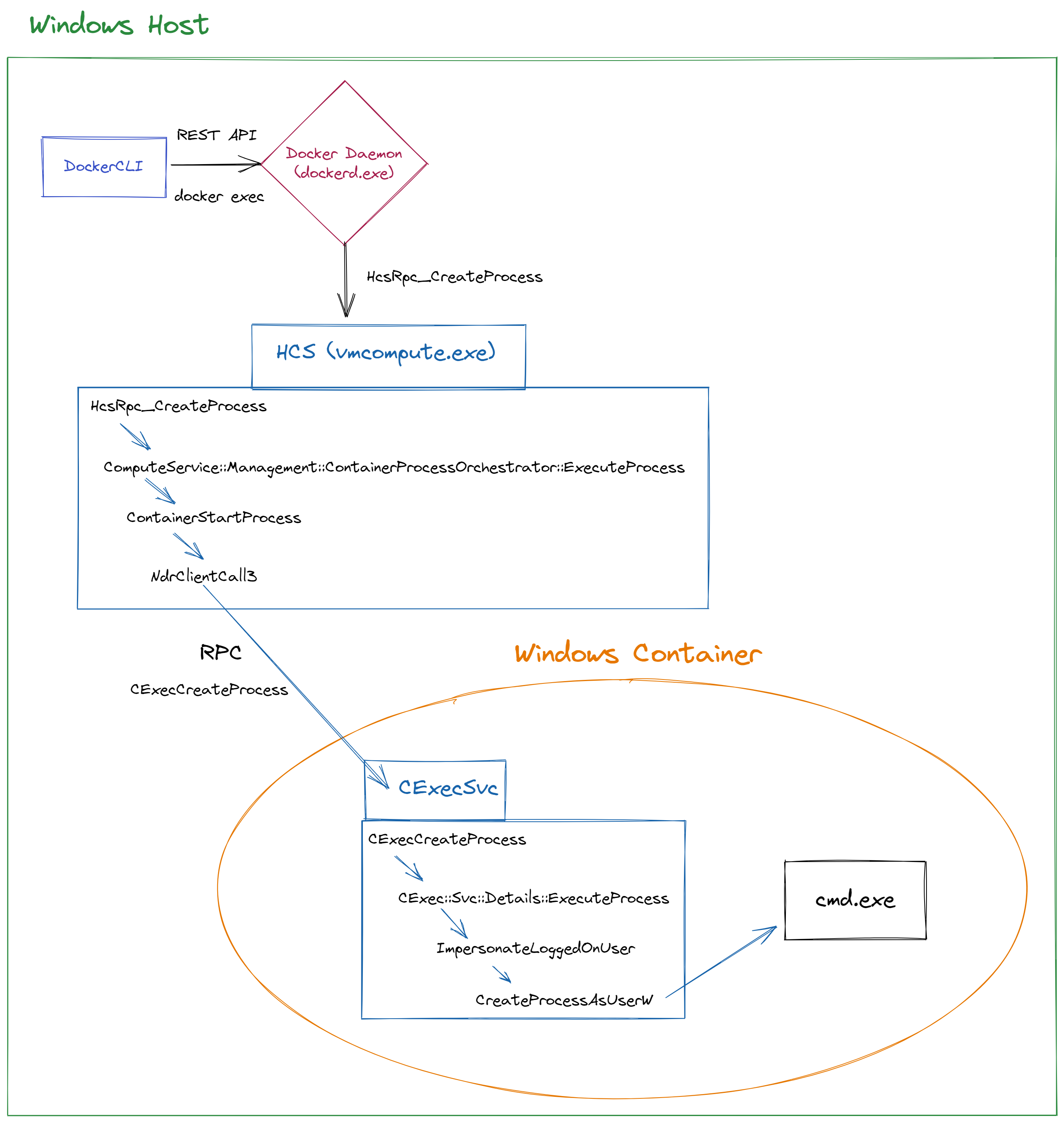

A less common tool that I found, ALPC Logger, was very close to what I was searching for. I tested it on the docker exec command:

Figure 12 – ALPC Logger

You can see in the figure above (Figure 12) how docker daemon (dockerd) sends a message to HCS (vmcompute), and then HCS sends a message to CExecSvc. Then, in the next line (index 290) you can see how CExecSvc returns the result to HCS and so on. Great! But what about the function names? They aren’t shown and this is something that could help understand what happens in high resolution.

In RPC, when the client calls a function, the function is identified by an opnum, which according to Microsoft’s MS-RPCE specification is:

“An operation number or numeric identifier that is used to identify a specific remote procedure call (RPC) method or a method in an interface.”

The only thing I needed was a way to filter these opnums and then use James’ tools to extract the function names. This is when my friend Ido Hoorvitch introduced me to logman, a tool by Microsoft that can create and manage Event Trace Sessions.

Using Logman to Trace RPC Events

In the beginning, I used the following commands:

logman start RPC_ONLY -p Microsoft-Windows-RPC -ets

logman start RPC_EVENTS -p Microsoft-Windows-RPC-Events -ets

logman start RCPSS_ONLY -p Microsoft-Windows-RPCSS -ets

This tells Windows to start to listen to RPC events. The problem with these commands is that they generate tons of information, most of which wasn’t relevant to me, so I used its filters, and this was the final result:

# Start listening to RPC events

logman start RPC_ONLY -p Microsoft-Windows-RPC "Microsoft-Windows-RPC/EEInfo,Microsoft-Windows-RPC/Debug" win:Informational -ets

logman start RPC_EVENTS -p Microsoft-Windows-RPC-Events -ets

logman start RCPSS_ONLY -p Microsoft-Windows-RPCSS "EpmapDebug,EpmapInterfaceRegister,EpmapInterfaceUnregister" win:Informational -ets

# Build event files from the trace file (".etl")

tracerpt RPC_ONLY.etl -o RPC_ONLY.evtx -of EVTX

tracerpt RPC_EVENTS.etl -o RPC_EVENTS.evtx -of EVTX

tracerpt RCPSS_ONLY.etl -o RCPSS_ONLY.evtx -of EVTX

# Stop listening to RPC events

logman stop RPC_ONLY -ets

logman stop RPC_EVENTS -ets

logman stop RCPSS_ONLY -ets
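If you repeat this often, it may help to generate the command lines from one table of sessions. The sketch below only builds the argument lists, using the same session names, providers, filters and flags as the listing above; nothing is executed. One assumption of mine that differs from the listing order: I stop each session before converting its .etl file, on the guess that the trace file is only complete once the session has ended.

```python
# (session name, provider, extra filter arguments) exactly as used in the listing above.
SESSIONS = [
    ("RPC_ONLY", "Microsoft-Windows-RPC",
     ["Microsoft-Windows-RPC/EEInfo,Microsoft-Windows-RPC/Debug", "win:Informational"]),
    ("RPC_EVENTS", "Microsoft-Windows-RPC-Events", []),
    ("RCPSS_ONLY", "Microsoft-Windows-RPCSS",
     ["EpmapDebug,EpmapInterfaceRegister,EpmapInterfaceUnregister", "win:Informational"]),
]


def start_cmd(name, provider, filters):
    """Build the `logman start` argument list for one trace session."""
    return ["logman", "start", name, "-p", provider, *filters, "-ets"]


def stop_cmd(name):
    """Build the `logman stop` argument list for one trace session."""
    return ["logman", "stop", name, "-ets"]


def convert_cmd(name):
    """Build the `tracerpt` argument list that turns NAME.etl into NAME.evtx."""
    return ["tracerpt", f"{name}.etl", "-o", f"{name}.evtx", "-of", "EVTX"]


def plan():
    """Start all sessions, then (after the workload has run) stop and convert each one."""
    steps = [start_cmd(*session) for session in SESSIONS]
    for name, _provider, _filters in SESSIONS:
        steps.append(stop_cmd(name))      # assumption: stop before converting
        steps.append(convert_cmd(name))
    return steps


if __name__ == "__main__":
    for step in plan():
        print(" ".join(step))
```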

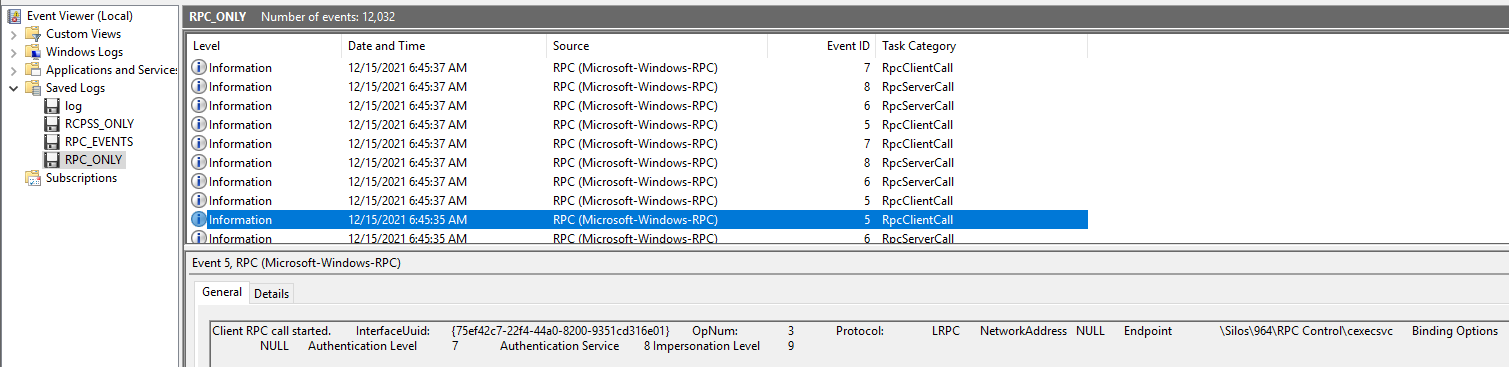

Eventually, the only interesting file was RPC_ONLY.evtx. For example, you can see in the figure below (Figure 13) a call to the UUID of CExecSvc. We also have the OpNum 3, which is the last function (CExecShutdownSystem) in the array of the CExecSvc procedures we saw earlier.

Figure 13 – RPC Event Viewer
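The lookup that turns an event’s interface UUID and OpNum into a function name can be sketched in a few lines of Python. The tables below are copied from the Get-RpcServer output earlier in this post; I am assuming the order of that output matches the opnum order, which is consistent with OpNum 3 being CExecShutdownSystem. The function name resolve and the fallback format for unknown entries are mine and are not taken from RPCMon.

```python
CEXEC_UUID = "75ef42c7-22f4-44a0-8200-9351cd316e01"
HCS_UUID = "e7a216af-1ec1-447f-8d3f-a87278db564d"

# Procedure names in the order Get-RpcServer listed them (assumed to be opnum order).
PROCEDURES = {
    CEXEC_UUID: [
        "CExecCreateProcess", "CExecResizeConsole",
        "CExecSignalProcess", "CExecShutdownSystem",
    ],
    HCS_UUID: [
        "HcsRpc_EnumerateSystems", "HcsRpc_CreateSystem", "HcsRpc_OpenSystem",
        "HcsRpc_StartSystem", "HcsRpc_ShutdownSystem", "HcsRpc_TerminateSystem",
        "HcsRpc_PauseSystem", "HcsRpc_ResumeSystem", "HcsRpc_SaveSystem",
        "HcsRpc_GetSystemProperties", "HcsRpc_ModifySystem",
        "HcsRpc_RegisterSystemNotifications", "HcsRpc_UnregisterSystemNotifications",
        "HcsRpc_QuerySystemNotification", "HcsRpc_CloseSystem", "HcsRpc_CreateProcess",
        "HcsRpc_OpenProcess", "HcsRpc_SignalProcess", "HcsRpc_GetProcessInfo",
        "HcsRpc_GetProcessProperties", "HcsRpc_ModifyProcess",
        "HcsRpc_RegisterProcessNotifications", "HcsRpc_UnregisterProcessNotifications",
        "HcsRpc_QueryProcessNotification", "HcsRpc_CloseProcess",
        "HcsRpc_GetServiceProperties", "HcsRpc_ModifyServiceSettings",
    ],
}


def resolve(uuid, opnum):
    """Map (interface UUID, opnum) to a function name, or a placeholder if unknown."""
    names = PROCEDURES.get(uuid.lower())
    if names is None or not 0 <= opnum < len(names):
        return f"unknown:{uuid.lower()}#{opnum}"
    return names[opnum]


if __name__ == "__main__":
    print(resolve(CEXEC_UUID, 3))
    print(resolve(HCS_UUID, 15))
```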

That’s it, we have everything we need to build an RPC monitor tool.

RPCMon – RPC Monitor Tool

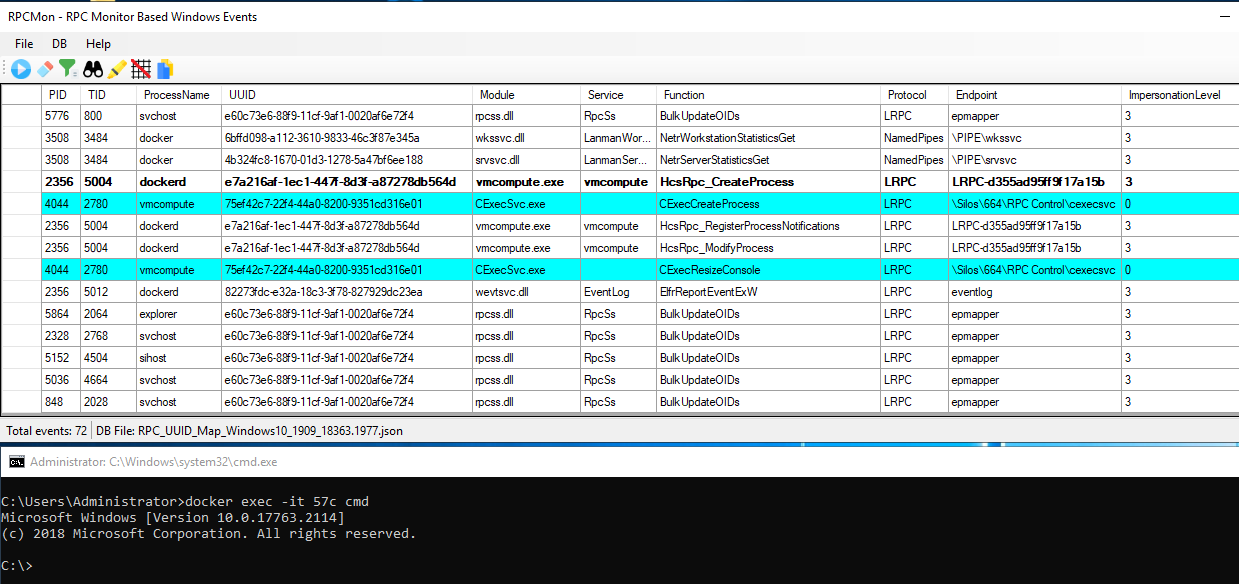

Before I started to work on the tool, I double-checked whether something similar already existed. To my surprise, I found one that was exactly what I needed, but its UUID database wasn’t up to date for my system, and I still wanted a Procmon-like tool. So we built RPCMon, an RPC monitor GUI tool based on Event Tracing for Windows (ETW). Here is an example of what getting a shell in a container looks like using the new tool (Figure 14):

Figure 14 – RPCMon monitor

It currently shows the RPC client calls. We can see how dockerd calls HcsRpc_CreateProcess, which triggers vmcompute to call CExecCreateProcess that eventually creates the cmd.exe inside the container.

The tool has similar options to Procmon: you can bold rows, search, highlight, filter events based on a column, etc. We also added an option to build a database in case the RPC database does not cover all the events:

Figure 15 – RPCMon monitor

We have other ideas for features to add in the future. This tool can be used for the research of other RPC calls, not only for Windows containers.

Calling HcsRpc_ShutdownSystem from a Low Privileged User?

Back to our attack vector. I couldn’t find a way to use CExecSvc to run code on the host, so I tried a different direction. I wanted to check and see if I could call the vmcompute functions from a low privileged user in the host. If I am able to do it, I will access the containers and maybe even escalate my privileges. To make a long story short, it didn’t work — but I do want to share my research process around it.

The first step was to get the RPC server of vmcompute and then build a client with Forshaw’s tools:

PS > $a = Get-RpcServer "C:\windows\system32\vmcompute.exe" -DbgHelpPath "C:\Program Files (x86)\Windows Kits\10\Debuggers\x64\dbghelp.dll"

PS > $a.Endpoints

UUID Version Protocol Endpoint Annotation

---- ------- -------- -------- ----------

e7a216af-1ec1-447f-8d3f-a87278db564d 1.0 ncalrpc LRPC-32740fb58a6edf506f

PS > $c = Get-RpcClient $a

PS > Connect-RpcClient $c -EndpointPath "LRPC-32740fb58a6edf506f"

After the RPC client was connected, I checked the procedures. Obviously, the most interesting one is HcsRpc_CreateProcess, but it takes many arguments, and I thought it could be too complex to try it for the first time. I first wanted to see if I had permission to call the RPC functions. The HcsRpc_ShutdownSystem looked like a good candidate:

PS C:\Users\Administrator> $a.Procedures[4]

Name : HcsRpc_ShutdownSystem

Params : {FC_SUPPLEMENT - NdrSupplementTypeReference - IsIn, , FC_UP - NdrPointerTypeReference - MustSize, MustFree, IsIn, FC_HYPER -

NdrSimpleTypeReference - IsIn, IsBasetype...}

ReturnValue : FC_LONG - NdrSimpleTypeReference - IsOut, IsReturn, IsBasetype

Handle : FC_BIND_CONTEXT - NdrSimpleTypeReference - 0

RpcFlags : 0

ProcNum : 4

StackSize : 40

HasAsyncHandle : False

DispatchFunction : 140697705411536

DispatchOffset : 1799120

InterpreterFlags : ServerMustSize, ClientMustSize, HasReturn, HasExtensions

Although it had five parameters, only three of them were input arguments (removed some rows):

PS C:\Users\Administrator> $a.Procedures[4].Params

Attributes : IsIn

Type : FC_SUPPLEMENT - NdrSupplementTypeReference

...

Name : p0

IsIn : True

Attributes : 0

Type : FC_BIND_CONTEXT - NdrSimpleTypeReference

...

Name :

IsIn : False

Attributes : MustSize, MustFree, IsIn

Type : FC_UP - NdrPointerTypeReference

...

Name : p1

IsIn : True

Attributes : IsIn, IsBasetype

Type : FC_HYPER - NdrSimpleTypeReference

...

Name : p2

IsIn : True

Attributes : MustSize, MustFree, IsOut

Type : FC_RP - NdrPointerTypeReference

...

Name : p3

IsIn : False

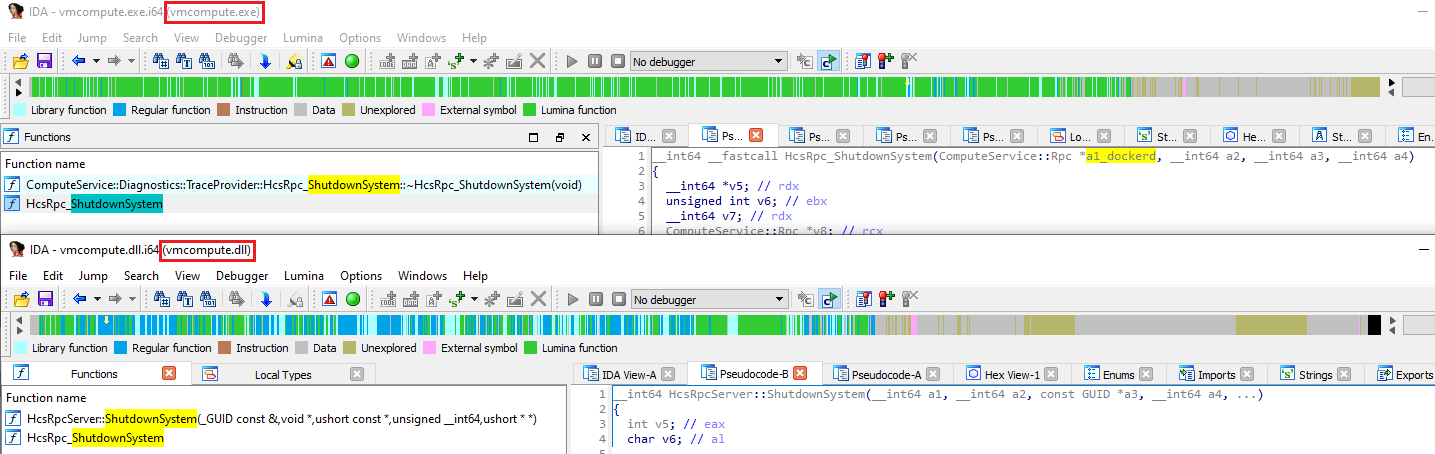

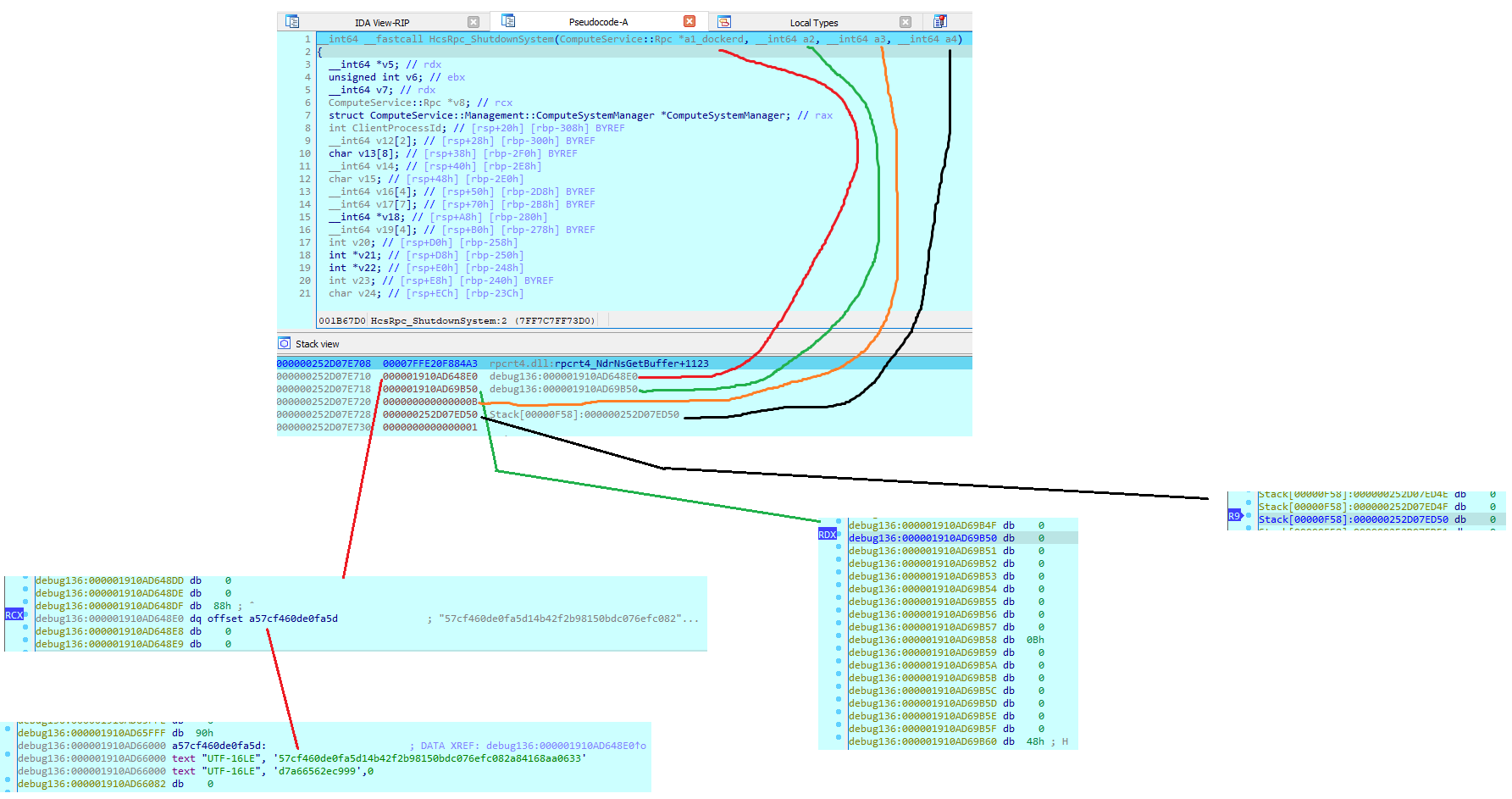

Only p0, p1 and p2 are input parameters. If you call the client function with more than three arguments, you will receive an error about too many parameters. Notice that if you reverse vmcompute.exe and vmcompute.dll, you will see that both functions receive four parameters (Figure 16):

Figure 16 – Comparing HcsRpc_ShutdownSystem in IDA through Vmcompute.exe and vmcompute.dll

Forshaw explained to me what the difference is; you can read about it here:

“When you want to do output parameter in PS, you need to create the variable and then use the special [ref] type, this is just a pain. So instead, for PS, I create a return structure that will hold the output values and the return code and remove that parameter from the function’s argument list.”
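To illustrate the design Forshaw describes, here is a small Python sketch that splits the HcsRpc_ShutdownSystem parameter list from the output above into the arguments the generated client expects and the fields that end up in the return structure. The Param class, the function name and the "retval" field name are my own illustration, not NtObjectManager’s actual types.

```python
from dataclasses import dataclass


@dataclass
class Param:
    name: str
    is_in: bool
    is_out: bool


# Parameters of HcsRpc_ShutdownSystem as listed above.
# The unnamed entry is the FC_BIND_CONTEXT handle (Attributes : 0).
SHUTDOWN_PARAMS = [
    Param("p0", is_in=True, is_out=False),
    Param("", is_in=False, is_out=False),
    Param("p1", is_in=True, is_out=False),
    Param("p2", is_in=True, is_out=False),
    Param("p3", is_in=False, is_out=True),
]


def client_signature(params):
    """Return (input argument names, return-structure field names).

    Output parameters are moved out of the argument list and into the return
    structure, next to the return code ("retval" is a hypothetical field name).
    """
    inputs = [p.name for p in params if p.is_in]
    outputs = [p.name for p in params if p.is_out]
    return inputs, outputs + ["retval"]


if __name__ == "__main__":
    inputs, returned = client_signature(SHUTDOWN_PARAMS)
    print("call with:", inputs)
    print("returned structure holds:", returned)
```

This is why the PowerShell client accepts exactly three arguments even though the native function takes more.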

I debugged the service to understand what the parameters should contain (see Figure 17 for the flow).

Figure 17 – Debugging parameters

The first parameter seems to be a pointer to an address that contains the container ID as a string. The second and the fourth were pointing to zero, and the third contained a number (0xB) that was different when I called the function again.

In the beginning, I tried to call the function like this:

PS > $c.HcsRpc_ShutdownSystem("57cf460de0fa5d14b42f2b98150bdc076efc082a84168aa0633d7a66562ec999",0,0)

Cannot convert argument "p0", with value: "57cf460de0fa5d14b42f2b98150bdc076efc082a84168aa0633d7a66562ec999", for "HcsRpc_ShutdownSystem" to type

"NtApiDotNet.Ndr.Marshal.NdrContextHandle": "Cannot convert the "57cf460de0fa5d14b42f2b98150bdc076efc082a84168aa0633d7a66562ec999" value of type

"System.String" to type "NtApiDotNet.Ndr.Marshal.NdrContextHandle"."

At line:1 char:1

+ $c.HcsRpc_ShutdownSystem("57cf460de0fa5d14b42f2b98150bdc076efc082a841 ...

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : NotSpecified: (:) [], MethodException

+ FullyQualifiedErrorId : MethodArgumentConversionInvalidCastArgument

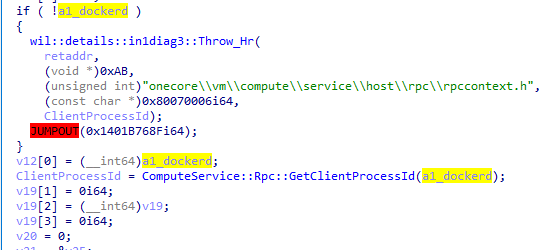

It didn’t work. Maybe we needed to pass a pointer in the first argument; I wasn’t sure. I decided to see what the purpose of the first parameter is. I noticed it (a1_dockerd) was being passed to GetClientProcessId (Figure 18):

Figure 18 – Debugging HcsRpc_ShutdownSystem in vmcompute.exe

Inside that function, it will retrieve the dockerd PID:

__int64 __fastcall ComputeService::Rpc::GetClientProcessId(ComputeService::Rpc *this)

{

unsigned int v1; // ebx

int RpcCallAttributes[32]; // [rsp+20h] [rbp-98h] BYREF

v1 = -1;

memset_0(RpcCallAttributes, 0, 0x78ui64);

RpcCallAttributes[1] = 16;

RpcCallAttributes[0] = 3;

if ( !RpcServerInqCallAttributesW(0i64, RpcCallAttributes) )

return (unsigned int)RpcCallAttributes[16];

return v1;

}
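The decompiled function indexes a raw int array, which hides what is actually being read. The Python ctypes sketch below lays out the leading fields of the call-attributes structure as I recall them from the Windows SDK (RPC_CALL_ATTRIBUTES_V2_W); treat the field names and order as my assumption rather than something verified in this research. Under that layout, on a 64-bit build the client PID sits at byte offset 64, which is int index 16, and the value 16 written to index 1 would be the flag that asks for the client PID.

```python
import ctypes

RPC_QUERY_CLIENT_PID = 0x10  # assumed flag name; matches RpcCallAttributes[1] = 16


class RpcCallAttributesPrefix(ctypes.Structure):
    """Leading fields of the call-attributes structure, up to the client PID.

    Assumed layout, reconstructed from memory of the SDK headers; later fields
    are omitted because the decompiled code does not touch them.
    """
    _fields_ = [
        ("Version", ctypes.c_uint32),                          # index 0, set to 3
        ("Flags", ctypes.c_uint32),                            # index 1, set to 16
        ("ServerPrincipalNameBufferLength", ctypes.c_uint32),
        ("ServerPrincipalName", ctypes.c_void_p),
        ("ClientPrincipalNameBufferLength", ctypes.c_uint32),
        ("ClientPrincipalName", ctypes.c_void_p),
        ("AuthenticationLevel", ctypes.c_uint32),
        ("AuthenticationService", ctypes.c_uint32),
        ("NullSession", ctypes.c_int32),
        ("KernelModeCaller", ctypes.c_int32),
        ("ProtocolSequence", ctypes.c_uint32),
        ("IsClientLocal", ctypes.c_uint32),
        ("ClientPID", ctypes.c_void_p),                        # HANDLE-sized field
    ]


def int_index(field_name):
    """Return the index a field would have when the struct is viewed as an int array."""
    offset = getattr(RpcCallAttributesPrefix, field_name).offset
    return offset // ctypes.sizeof(ctypes.c_int32)


if __name__ == "__main__":
    for name in ("Version", "Flags", "ClientPID"):
        print(name, int_index(name))
```

If the layout assumption holds, RpcCallAttributes[16] in the listing is simply the ClientPID field, which is how the function ends up returning the PID of dockerd.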

IDA represented it as ComputeService::Rpc *this, but it didn’t have any information about it. I decided to try to call the function again, but with some sort of pointer from the class System.Guid, and received an “Access is Denied”:

$d = [NtApiDotNet.Ndr.Marshal.NdrContextHandle]::new(0, [system.guid]::NewGuid())

PS C:\Users\Administrator> $c.HcsRpc_ShutdownSystem($d,0,0)

Exception calling "HcsRpc_ShutdownSystem" with "3" argument(s): "(0x80070005) - Access is denied."

At line:1 char:1

+ $c.HcsRpc_ShutdownSystem($d,0,0)

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : NotSpecified: (:) [], MethodInvocationException

+ FullyQualifiedErrorId : RpcFaultException

Unfortunately, there is a permission check, so you can’t call the function from a low privileged user. If the call had been allowed, it would have been a different story, and we would have needed to investigate the structure of the parameters further.
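The 0x80070005 value in the error above is an HRESULT, and splitting it into its parts shows that it wraps the Win32 error 5 (access denied). Here is a minimal Python sketch of that decoding, using the standard HRESULT bit layout (failure bit, 11-bit facility, 16-bit code); the function name is mine, and I only name the one facility I am sure about.

```python
FACILITY_WIN32 = 7        # facility used when an HRESULT wraps a Win32 error code
ERROR_ACCESS_DENIED = 5   # Win32 error code for "Access is denied"


def decode_hresult(value):
    """Split a 32-bit HRESULT into its failure bit, facility and code."""
    value &= 0xFFFFFFFF
    return {
        "failure": bool(value >> 31),
        "facility": (value >> 16) & 0x7FF,
        "code": value & 0xFFFF,
    }


if __name__ == "__main__":
    # Both values appear in error messages earlier in this post.
    for hresult in (0x80070005, 0xC0370106):
        print(hex(hresult), decode_hresult(hresult))
```

The same helper applied to 0xc0370106 from the dockerd log gives a different facility, so that failure did not come from a plain Win32 error code.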

Summary

In this research, we learned about the process behind the scenes when accessing containers. Learning about the communication between all the processes involved in creating a container could help us think about new relevant attack vectors. To help us with the learning of this subject, we created a new tool, RPCMon, to monitor RPC communication and help in other future research projects related to RPC.

References

- Linux Container on Windows 10

- Isolation modes

- Docker Desktop research

- Container Platform Architecture

- Windows Container Network

- “Who Contains the Containers?” – An article by James Forshaw about Windows Containers

- An article by CheckPoint about Windows Sandbox, with information about vmcompute

- Windows Internals 7 Part 1, pages 183, 190

- Windows Container Security lecture from DockerCon 2018

- Explanation about LCOW vs Linux VM on Windows

- Explanation about the difference between Docker for Windows and Docker on Windows