Artificial intelligence (AI) is transforming modern society at unprecedented speed. It can do your homework, help you make better investment decisions, turn your selfie into a Renaissance painting or write code on your behalf. While ChatGPT and other generative AI tools can be powerful forces for good, they’ve also unleashed a tsunami of attacker innovation, and concerns are mounting quickly. In the last week alone, several government officials and AI leaders have issued warnings about the growing AI threat:

- The Center for AI Safety released this one-sentence statement signed by more than 350 prominent AI figures: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

- The head of the U.S. Federal Trade Commission emphasized the need for vigilance, noting that the FTC is already seeing AI used to “turbocharge” fraud and scams.

- A panel of United Nations experts called for “greater transparency, oversight and regulation to address the negative impacts of new and emerging digital tools and online spaces on human rights.”

- The EU urged tech firms to “clearly label” any services with a potential to disseminate AI-generated disinformation.

As part of the broader cybersecurity research community, CyberArk Labs is focused on this evolving threat landscape to better understand what emerging AI attack vectors mean for identity security programs and help shape new defensive innovations.

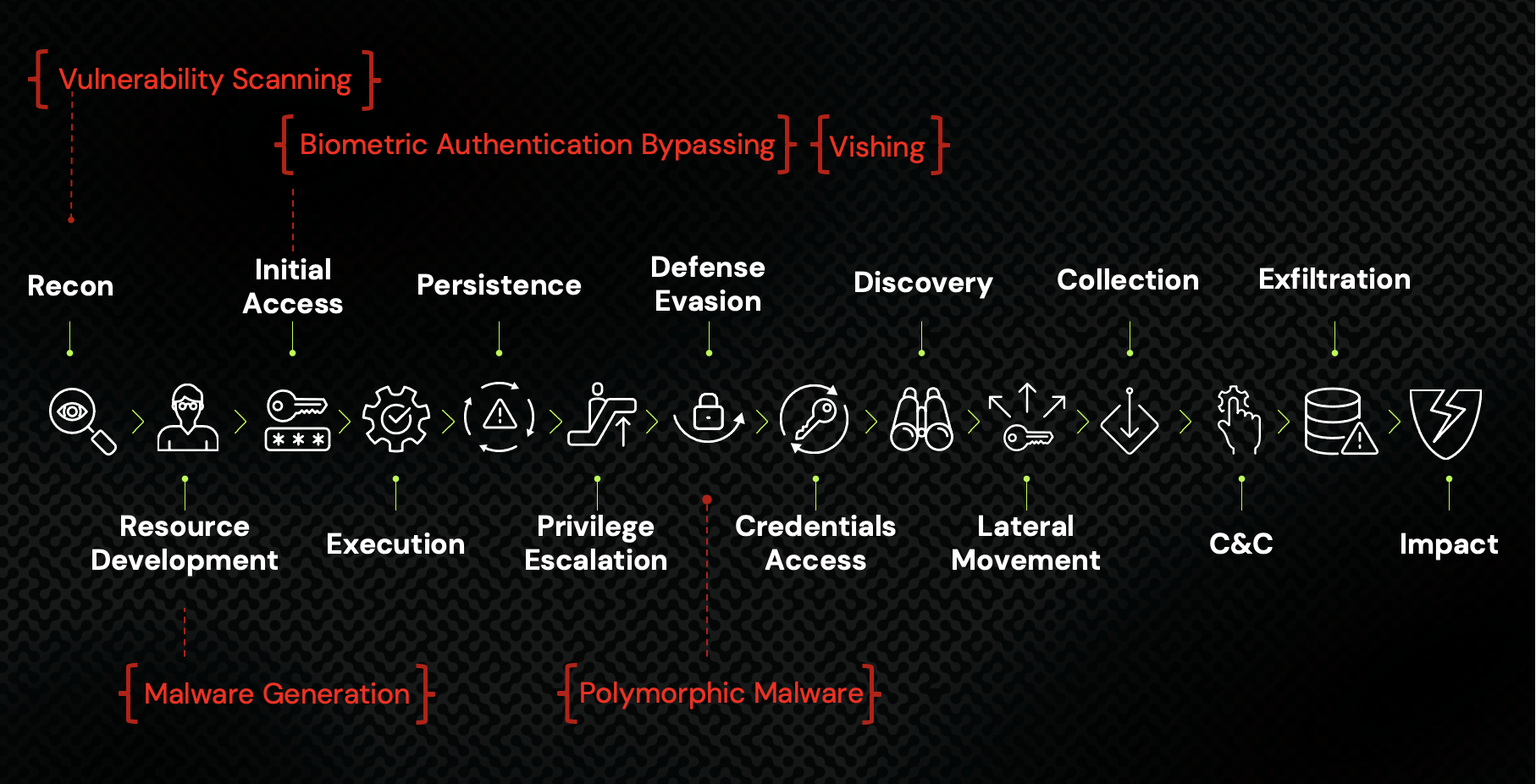

I presented highlights from our latest threat research at CyberArk IMPACT23 in Boston, including the three attack scenarios highlighted below. As you’ll see, AI is changing the offensive game in several ways, but not in every way. Nearly every attack continues to follow a familiar progression, as outlined in the MITRE ATT&CK Matrix for Enterprise illustrated here, leaning heavily on identity compromise and high-sensitivity access:

A common attack chain following 14 tactics defined by the MITRE ATT&CK Matrix for Enterprise

Offensive AI Scenario 1: Vishing

As a conscientious employee, you’ve become very skeptical of phishing emails and know what to look out for. But imagine you’re sitting at your desk and receive a WhatsApp message from the CEO of your company asking you to transfer money right away. You see his avatar and hear his distinct voice on the message. It’s a little strange since you’ve never communicated with him this way, but you ask him where you should send the funds and he responds immediately with all the necessary details. It must be him, right?

Not so fast. AI text-to-speech models make it easy for attackers to mine publicly available information, such as CEO media interviews, and use it to impersonate company executives, celebrities and even U.S. presidents. By building trust with their target, attackers can obtain access to credentials and other sensitive information. Now imagine how such a vishing campaign could be done at scale using automated, real-time generation of text and text-to-voice models.

Such AI-based deep fakes are already commonplace and can be very difficult to spot. AI experts predict that AI-generated content will eventually be indistinguishable from human-created content – which is troubling for cybersecurity professionals and everyone else.

Offensive AI Scenario 2: Biometric Authentication

Now let’s switch gears from attacks on the ears to attacks on the eyes. Facial recognition is a popular biometric authentication option for accessing devices and infrastructure. It can also be duped by threat actors using generative AI to compromise identities and gain an initial foothold in an environment.

In one remarkable study, threat researchers at Tel Aviv University created a “master face” (or “master key”) that could be used to bypass most facial recognition systems. They used generative adversarial networks (GANs), a class of AI models, to iteratively test and optimize a vector image to match facial images housed in a large, open repository. Their research produced a set of nine images that matched more than 60% of the faces in the database, meaning an attacker using those images would have a better than 60% chance of bypassing facial recognition authentication to compromise an identity.
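
One way to read the 60% figure is as a coverage number: the share of enrolled faces that at least one of the candidate images matches. The sketch below shows only that arithmetic on made-up data. The match matrix, its size and the `coverage` helper are assumptions for illustration and are not taken from the Tel Aviv University study.

```python
from typing import List


def coverage(match_matrix: List[List[bool]]) -> float:
    """Fraction of enrolled faces matched by at least one candidate image.

    match_matrix[i][j] is True when candidate image i is accepted as a
    match for enrolled face j.
    """
    if not match_matrix or not match_matrix[0]:
        return 0.0
    n_faces = len(match_matrix[0])
    covered = sum(
        any(row[j] for row in match_matrix) for j in range(n_faces)
    )
    return covered / n_faces


# Hypothetical toy data: 3 candidate images (rows) x 5 enrolled faces (columns).
toy_matches = [
    [True, False, False, True, False],
    [False, True, False, False, False],
    [False, False, False, True, False],
]

print(f"coverage = {coverage(toy_matches):.0%}")  # prints "coverage = 60%"
```

For defenders, the same number is a useful way to reason about how much a matching threshold or a liveness check reduces exposure: the lower the coverage a small set of images can reach, the better.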

Our CyberArk Labs team built on this theoretical research to demonstrate how attackers could actually bypass facial recognition authentication. Check it out:

CyberArk Labs demonstrates an attack using generative AI to bypass facial recognition authentication

Generative AI models have been around for years – so why so much buzz right now? In a word, scale. Today’s models can learn at incredible scale. Consider that GPT-2 had “just” 1.5 billion parameters (variables in an AI system whose values are adjusted during training to establish how input data gets transformed into the desired output). Its successor, GPT-3, has roughly 100x more parameters than that. This rapid growth in parameters is directly connected to advances in cloud computing and the new, perimeter-less environments that define our digital era. As AI models continue to learn, they’ll continue to get better at creating realistic deep fakes, malware and other threats that will change the landscape.
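
As a rough check on that jump in scale, the snippet below divides the publicly reported parameter counts for the largest GPT-2 model (about 1.5 billion) by those for GPT-3 (about 175 billion). These figures come from the models' public descriptions and are stated here as assumptions, not as results of our research.

```python
# Publicly reported parameter counts (assumed here for illustration).
GPT2_PARAMS = 1.5e9   # largest GPT-2 model
GPT3_PARAMS = 175e9   # GPT-3

ratio = GPT3_PARAMS / GPT2_PARAMS
print(f"GPT-3 has roughly {ratio:.0f}x the parameters of GPT-2")
# prints "GPT-3 has roughly 117x the parameters of GPT-2"
```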

Offensive AI Scenario 3: Polymorphic Malware

Many researchers and developers are experimenting with generative AI to write all kinds of code – including malware. For offensive campaigns, AI can be incredibly effective during the earliest stages of the attack chain, such as reconnaissance, malware development and initial access. However, it is not yet clear that AI TTPs (tactics, techniques and procedures) are useful after an attacker is inside and working to escalate privileges, access credentials and move laterally. These three stages all hinge on identity compromise, underscoring the importance of robust, intelligent identity security controls in protecting critical systems and data.

Based on our research, CyberArk Labs sees a new AI-fueled technique that could be categorized under MITRE’s defense evasion stage: polymorphic malware. We continue to analyze this area closely.

Offensive AI techniques mapped to the MITRE ATT&CK Matrix for Enterprise

Polymorphic malware mutates its implementation while keeping its original functionality intact. Until recently, malware was defined as “polymorphic” if it changed how it encrypted its various modules (this makes it especially difficult for defenders to identify the malware). After generative AI introduced the possibility of mutating or generating code modules with different implementations, our team quickly began experimenting with ChatGPT to create polymorphic malware. Not because it’s a great way to create code (it’s not), but because it’s so accessible and fun …
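
To see why a changed implementation is a problem for signature-style detection, consider this deliberately benign sketch. Two hypothetical snippets compute the same sum in different ways: their behavior is identical, but their SHA-256 hashes are not. The snippets and helper names are illustrative assumptions and are not code from our research.

```python
import hashlib

# Two benign, functionally equivalent implementations of the same task.
VARIANT_A = "def total(xs):\n    return sum(xs)\n"
VARIANT_B = (
    "def total(xs):\n"
    "    acc = 0\n"
    "    for x in xs:\n"
    "        acc += x\n"
    "    return acc\n"
)


def sha256_hex(source: str) -> str:
    """Hash the source text, as a simple stand-in for a static signature."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def run_total(source: str, data):
    """Execute a variant and call its total() function."""
    namespace = {}
    exec(source, namespace)  # acceptable here: the source is a fixed string above
    return namespace["total"](data)


same_behavior = run_total(VARIANT_A, [1, 2, 3]) == run_total(VARIANT_B, [1, 2, 3])
same_hash = sha256_hex(VARIANT_A) == sha256_hex(VARIANT_B)

print(f"same behavior: {same_behavior}, same hash: {same_hash}")
# prints "same behavior: True, same hash: False"
```

A defense that keys only on what the code looks like has to catch every variant. A defense that keys on what the code does, or on what it is allowed to access, does not.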

We asked ChatGPT to generate an info-stealer – a type of malware that fetches session cookies once it’s executed on disk. You can check out all the code and technical details in our Threat Research blog post, “Chatting Our Way Into Creating a Polymorphic Malware,” but here are our high-level takeaways: ChatGPT is an enthusiastic, yet naïve, developer. It writes code quickly but misses critical details and context. During our experiment, the chatbot created a hard-coded password and tried to use it to decrypt session cookies – a huge security red flag that made us wonder where it learned this risky (and unfortunately, very common) practice.

Ultimately, we found that defense evasion using AI-generated polymorphic malware is viable. For example, an attacker could use ChatGPT to generate (and continuously mutate) information-stealing code for injection. By infecting an endpoint device and targeting identities – locally stored session cookies, in our research – they could impersonate the device user, bypass security defenses and access target systems while staying under the radar. As AI models improve and attackers continue to innovate, automated identity-based attacks like these will become part of malware operations.

Countering Attack Innovation with AI-Powered Defense

We can take away three things from these attack scenarios:

- AI has already impacted, and will continue to impact, the threat landscape in many ways – from how security weaknesses are found to how malicious code is developed. It presents threat actors with new opportunities to target identities and even bypass authentication mechanisms. And it won’t take long for AI to hone its skills.

- Identities remain prime attack targets. Identity compromise continues to be the most effective and efficient way for attackers to move through environments and reach sensitive systems and data.

- Malware-agnostic defenses are even more critical. Security layers must evolve to not only quarantine malicious activity, but also enforce preventive practices, such as least privilege access or conditional access to local resources (like cookie stores) and network resources (like web applications).
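
The last takeaway can be sketched as a default-deny access check: a process may read a protected local resource only if an explicit rule allows it, regardless of what the requesting code looks like. The process names, paths and `Rule` structure below are hypothetical and do not reflect any specific product's policy format.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Sequence


@dataclass(frozen=True)
class Rule:
    process: str        # executable name allowed to touch the resource
    resource_glob: str  # glob pattern for the protected path


# Hypothetical policy: only the browser itself may read its cookie store.
POLICY = (
    Rule(process="browser.exe", resource_glob="*/BrowserProfile/Cookies*"),
)


def is_allowed(process: str, resource: str, policy: Sequence[Rule] = POLICY) -> bool:
    """Default-deny: access is granted only if an explicit rule matches."""
    return any(
        rule.process == process and fnmatchcase(resource, rule.resource_glob)
        for rule in policy
    )


cookie_store = "C:/Users/a/BrowserProfile/Cookies"
print(is_allowed("browser.exe", cookie_store))  # prints "True"
print(is_allowed("unknown.exe", cookie_store))  # prints "False"
```

Because the decision depends on who is asking and what is being accessed, it holds up even when the requesting code has been mutated to evade signature matching.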

As our AI-focused threat research continues, it’s important to remember that AI is also an incredibly powerful tool for cyber defenders. It will be key to countering change in the threat landscape, improving agility and helping organizations stay one step ahead of attackers. It also represents a promising new chapter where cybersecurity deployments are simpler, highly automated and more impactful. By harnessing AI to optimize security boundaries where they’re needed most – around human and non-human identities – organizations can effectively mitigate threats today and in the future.

Editor’s note: To learn more about the ever-expanding attack surface, attacker techniques and trends in identity-based cyberattacks, join CyberArk on the Impact World Tour in a city near you.

Lavi Lazarovitz is vice president of cyber research at CyberArk Labs.