A Brief History of the Zero Trust Model

Established in 2010 by industry analyst John Kindervag, the “Zero Trust model” is centered on the belief that organizations should not automatically trust anything inside or outside their perimeters; instead, they must verify anything and everything trying to connect to their systems before granting access. To borrow a line often attributed to Joseph Stalin, “I trust no one, not even myself.” Essentially, the same rule applies to this concept.

In the wake of the U.S. Office of Personnel Management (OPM) breach, the House of Representatives strongly recommended government agencies adopt a Zero Trust framework to protect their most sensitive networks from similar attacks. Market research shows Zero Trust models, and the technologies that support them, are becoming more mainstream and readily adopted by enterprise-level organizations worldwide. When organizations like Google create and implement their own flavor of Zero Trust, BeyondCorp, people start to pay attention.

This two-part blog will first focus on how perimeter security has changed over time and how the importance of securing privileged access has increased in line with this change. The second installment will highlight five critical considerations for modern architectures.

The (D)evolution of the Perimeter

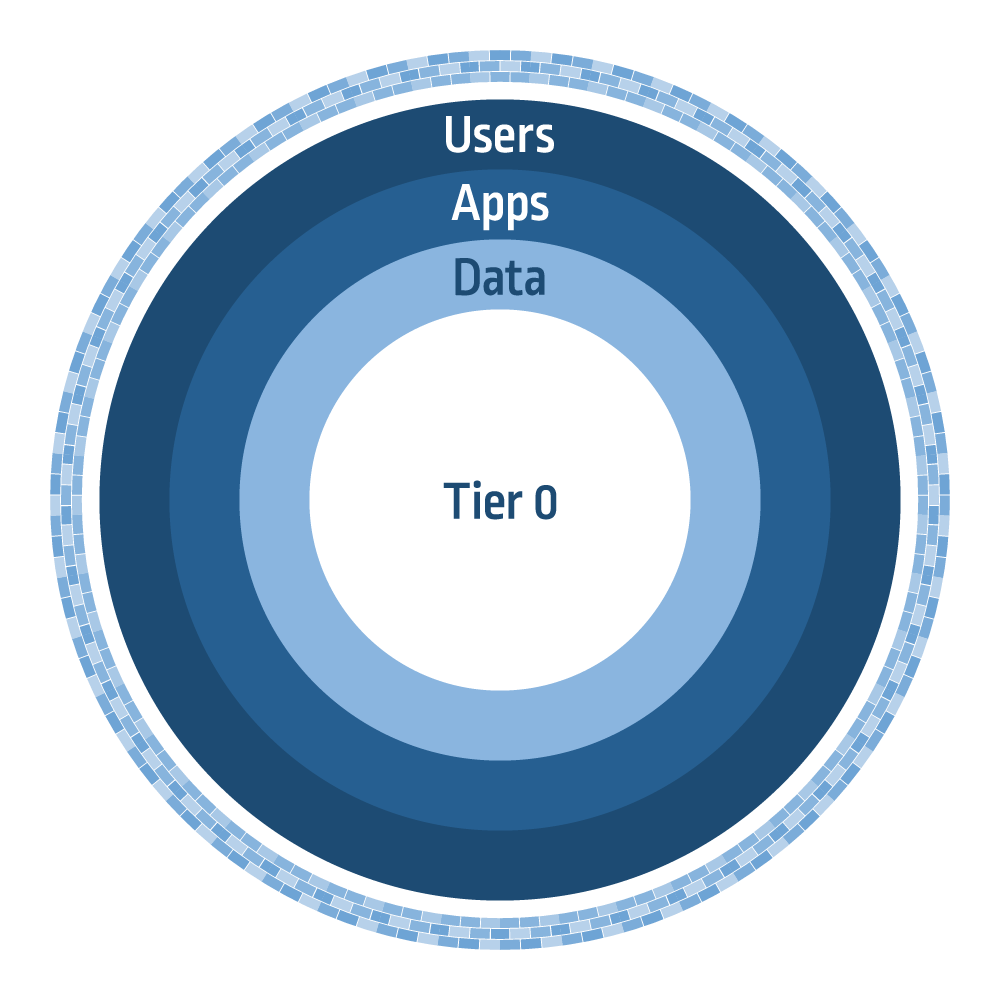

In the beginning, maintaining a high level of protection from cyber threats was very much focused on securing the perimeter. This was the golden age of firewalls, VPNs and DMZs (Figure 1). Trust was, essentially, established and defined by the perimeter. At this point in time, the lifeblood of the company existed almost exclusively within the physical walls of the organization. The belief was that if you’re connected to the network, then you are trusted. If you’re an employee of the company, then you can’t go rogue. In these early days, organizations focused on perimeter security to prevent things like network intrusion, malware, phishing, denial of service and zero-day attacks. This is all well and good, but this traditional “Tootsie Pop” model (remember those commercials?) featured a strong exterior, with the focus placed almost exclusively on the perceived threat from the outside, and completely ignored the soft, chocolaty goodness directly in the middle.
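To make the contrast concrete, here is a minimal Python sketch of the two trust decisions. It is an illustration only, not a real product's logic: the `AccessRequest` fields, the `10.0.0.0/8` corporate range and both function names are assumptions invented for this example.

```python
from dataclasses import dataclass
import ipaddress

# Hypothetical corporate address range, chosen only for illustration.
CORPORATE_NETWORK = ipaddress.ip_network("10.0.0.0/8")


@dataclass(frozen=True)
class AccessRequest:
    source_ip: str
    identity_verified: bool  # assumed signal: the user passed strong authentication
    device_compliant: bool   # assumed signal: the device passed a posture check


def perimeter_allows(request: AccessRequest) -> bool:
    """Perimeter model: being on the network is treated as proof of trust."""
    return ipaddress.ip_address(request.source_ip) in CORPORATE_NETWORK


def zero_trust_allows(request: AccessRequest) -> bool:
    """Zero Trust model: network location is ignored; every request is verified."""
    return request.identity_verified and request.device_compliant


# A compromised machine that is already connected to the internal network.
insider = AccessRequest("10.1.2.3", identity_verified=False, device_compliant=False)
# A verified employee on a compliant device, connecting from outside.
remote = AccessRequest("203.0.113.7", identity_verified=True, device_compliant=True)
```

In this sketch the perimeter check admits the compromised insider and rejects the verified remote employee, while the Zero Trust check does the opposite, because it never consults network location.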

Over time, we ultimately punctured this traditional security bubble, effectively bringing the problems inside, which meant redefining our sense of trust and appropriate controls. First, users transformed into digital nomads, abandoning their corporate or branch locations to become fully remote employees. We then opened up our networks to consumers so that they could use our applications, leading to extranets and moving another layer of the concentric circles in Figure 1 to the outside. For example, with VPN connections, organizations often split the traffic, allowing some of it to go directly to the internet and the rest through the VPN to reach the organization’s applications. The VPN connection inherently trusts the user’s machine, so by connecting to those machines the organization potentially exposes itself to malware, which can then spread through the network.

Next, the adoption of SaaS applications took hold as businesses looked to scale their applications across multiple end-user PCs without consuming too much of the (at the time) expensive hardware and storage space.

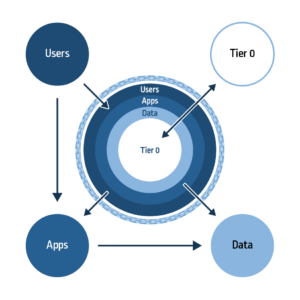

Naturally, we then find our data and workloads moving to the cloud. Our files, documents and emails are on Office 365, and our usage of public data sources (and of data held within SaaS applications) increases. Lastly, infrastructure extends to hybrid architectures via Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform, which is how many organizations are architected today (Figure 2).

Throughout this evolutionary process, the perimeter became so fluid and dynamic in nature that the boundaries, in the traditional sense, ultimately disappeared. The perimeter is no longer a static entity; it’s been made permeable by the disruption of things like cloud, digital transformation, the Internet of Things (IoT), mobile access and an increasingly geo-distributed workforce.

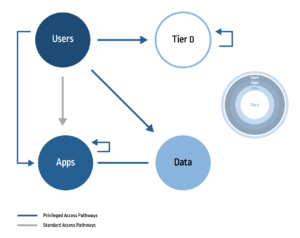

Within these modernized architectures and with an expanded attack surface, the privileged access pathway creates significantly more risk (Figure 3). Let’s differentiate the two access pathways: the standard user ultimately has very basic, low-level access limited to just the application layer; the privileged user, essentially, has unfettered access to the application layer, sensitive data and the mission-critical Tier 0 assets. The privileged pathway inherently carries a much higher level of risk if left unmanaged and unsecured, given the decentralized nature of this model.
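The difference between the two pathways can be sketched as a simple reachability map. This is a hypothetical Python model built only from the description above; the `Layer` names, the `PATHWAY_REACH` mapping and the helper function are assumptions for illustration, not part of any real access-control product.

```python
from enum import Enum
from typing import List


class Layer(Enum):
    APPLICATION = "application layer"
    SENSITIVE_DATA = "sensitive data"
    TIER_0 = "Tier 0 assets"


# Hypothetical mapping of each access pathway to the layers it can reach,
# following the description in the text.
PATHWAY_REACH = {
    "standard": {Layer.APPLICATION},
    "privileged": {Layer.APPLICATION, Layer.SENSITIVE_DATA, Layer.TIER_0},
}


def exposed_if_compromised(pathway: str) -> List[str]:
    """Layers an attacker could reach with stolen credentials for this pathway."""
    # Iterate over Layer so the result follows the enum's definition order.
    return [layer.value for layer in Layer if layer in PATHWAY_REACH[pathway]]


print(exposed_if_compromised("standard"))
print(exposed_if_compromised("privileged"))
```

Under these assumptions, a stolen standard credential exposes one layer, while a stolen privileged credential exposes all three, which is why the privileged pathway deserves the tighter controls.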

Zero Trust applies not only to human users but to non-human users as well, e.g. applications interacting with operating systems via service accounts, and business (and robotic) automation processes in which software bots connect to, store and access sensitive data and applications. As the security layers slip through the fingers of the organization, securing important data becomes a much bigger challenge.

Let’s highlight a standard workflow for the privileged user. Let’s assume a privileged user is working abroad while on a business trip and he or she is trying to configure and run a cloud service hosted in an AWS instance, which requires pulling data from a database running on a Unix box racked in an existing mainframe. Ensuring that there’s a consistent point of trust throughout this workflow is a challenge that needs to be addressed; the user remotely connects to different islands of systems, databases and applications (both on-premises and in the cloud), creating many potential points of entry for an attacker.

This evolution of the security model not only aligns with the basis on which the principles of Zero Trust were established, it also reaffirms the importance of securing privileged access. The foundation of Zero Trust is, arguably, the same as the reason CyberArk and the privileged access management space exist. Now, while most enterprises may not be willing to fully abandon firewalls and perimeter security, they certainly should be placing their focus on tightening security from the inside to mitigate the risk of an advanced attack.

In the next installment, we discuss the evolution of trust and make recommendations for building out modern architectures that align to Zero Trust frameworks.

To learn more, watch the Implementing Privileged Access Security into Zero Trust Models and Architectures webinar.

Continue reading in part 2.